Chemoinformatic Analysis of Natural Product Libraries: Accelerating Modern Drug Discovery

Abstract

This article provides a comprehensive overview of the application of chemoinformatics in the analysis of natural product (NP) libraries for drug discovery. It explores the foundational role of NPs as sources of bioactive compounds and unique molecular scaffolds. The piece details key methodological approaches for profiling NP databases, including physicochemical property analysis, fragment-based design, and chemical space visualization. It further addresses current challenges in data curation and AI integration, offering troubleshooting and optimization strategies. Finally, it presents a comparative analysis of NP libraries against synthetic compounds and discusses the validation of their drug-like properties and chemical diversity, synthesizing key findings to outline future directions for the field.

The Enduring Role of Natural Products in Drug Discovery

Historical Foundations of Natural Product Medicine

Natural products, often referred to as secondary metabolites, represent the most successful source of potential drug leads in history [1]. These compounds are not essential for the growth, development or reproduction of an organism but are produced as a result of the organism adapting to its surrounding environment or as a defense mechanism against predators [1]. The biosynthesis of secondary metabolites is derived from fundamental processes including photosynthesis, glycolysis and the Krebs cycle, which afford biosynthetic intermediates that ultimately lead to the formation of natural products with immense structural diversity [1].

The medicinal use of natural products dates back to ancient civilizations, with the earliest records depicted on clay tablets in cuneiform from Mesopotamia (2600 B.C.) documenting oils from Cupressus sempervirens (Cypress) and Commiphora species (myrrh) which are still used today to treat coughs, colds, and inflammation [1]. The Ebers Papyrus (2900 B.C.), an Egyptian pharmaceutical record, documents over 700 plant-based drugs ranging from gargles, pills, infusions, to ointments [1]. Similarly, the Chinese Materia Medica (1100 B.C.), Shennong Herbal (~100 B.C.), and the Tang Herbal (659 A.D.) provide extensive documentation of natural product uses [1].

Historically significant natural products have formed the basis of many modern therapeutics. The anti-inflammatory agent acetylsalicylic acid (aspirin) was derived from salicin, isolated from the bark of the willow tree Salix alba L. [1]. Investigation of Papaver somniferum L. (opium poppy) led to the isolation of several alkaloids, including morphine, first reported in 1803, which became a commercially important drug [1] [2]. These early discoveries established the foundation for natural product-based drug development.

Table 1: Historical Documentation of Natural Product Medicines

| Era/Period | Document/Source | Key Natural Product Information |
|---|---|---|
| Mesopotamia (2600 B.C.) | Clay tablets in cuneiform | Oils from Cupressus sempervirens (Cypress) and Commiphora species (myrrh) for coughs, colds, inflammation |
| Egypt (2900 B.C.) | Ebers Papyrus | Over 700 plant-based drugs (gargles, pills, infusions, ointments) |
| China (1100 B.C.) | Wu Shi Er Bing Fang (Materia Medica) | 52 prescriptions documenting natural product uses |
| China (~100 B.C.) | Shennong Herbal | 365 drugs from natural sources |
| China (659 A.D.) | Tang Herbal | 850 drugs systematically documented |
| Greece (100 A.D.) | Dioscorides records | Collection, storage, and uses of medicinal herbs |

Traditional medicinal practices across cultures have made extensive use of natural products. The plant genus Salvia was used by Native American tribes of southern California as an aid in childbirth, while Alhagi maurorum Medik (camel's thorn) was documented by Ayurvedic practitioners to treat anorexia, constipation, dermatosis, and other conditions [1]. Ligusticum scoticum Linnaeus, found in Northern Europe, was believed to protect against daily infection and served as an aphrodisiac and sedative [1]. Conversely, some naturally occurring substances, such as Atropa belladonna Linnaeus (deadly nightshade), were recognized as poisonous and excluded from folk medicine compilations [1].

Beyond terrestrial plants, other organisms have provided valuable therapeutic agents. The fungus Piptoporus betulinus, which grows on birches, was steamed to produce charcoal valued as an antiseptic and disinfectant, while strips of this fungus were used for staunching bleeding [1]. Lichens have been used as raw materials for perfumes, cosmetics, and medicine since early Chinese and Egyptian civilizations, with Usnea species traditionally used for scalp diseases and still sold in anti-dandruff shampoos [1]. The marine environment, though less documented in traditional medicine, includes examples such as red algae Chondrus crispus and Mastocarpus stellatus used as folk cures for colds, sore throats, and chest infections including tuberculosis [1].

Natural Products in Modern Drug Discovery

Natural products and their structural analogues have historically made a major contribution to pharmacotherapy, especially for cancer and infectious diseases [3]. Approximately 40% of drugs approved by the FDA in recent decades are natural products, their derivatives, or synthetic mimetics related to natural products [4]. Higher plants remain one of the major sources of modern drugs, with over 25% of all drugs approved by the FDA and/or the European Medicines Agency (EMA) being of plant origin [2]. The vast majority of successful anticancer drugs and antibiotics originate from natural sources, with antibiotics derived mainly from microbes, such as penicillins from Penicillium spp. and tetracyclines from Streptomyces aureofaciens [2].

Table 2: Therapeutic Applications of Natural Products in Modern Medicine

| Therapeutic Area | Key Natural Product Drugs | Natural Source | Clinical Application |
|---|---|---|---|
| Analgesia | Morphine, Codeine | Papaver somniferum (opium poppy) | Narcotic analgesic for pain management |
| Cancer | Paclitaxel | Taxus brevifolia | Anticancer drug |
| | Vinblastine, Vincristine | Catharanthus roseus | Anticancer drugs |
| | Doxorubicin | Streptomyces peucetius | Anticancer drug |
| Infectious Diseases | Penicillins | Penicillium spp. | Antibiotic |
| | Cephalosporins | Acremonium spp. | Antibiotic |
| | Artemisinin | Artemisia annua | Antimalarial |
| | Quinine | Cinchona tree bark | Antimalarial |
| Immunosuppression | Cyclosporine | Tolypocladium inflatum | Immunosuppressant |

Despite their historical success, natural products present challenges for drug discovery, including technical barriers to screening, isolation, characterization, and optimization, which contributed to a decline in their pursuit by the pharmaceutical industry from the 1990s onwards [3]. However, in recent years, several technological and scientific developments—including improved analytical tools, genome mining and engineering strategies, and microbial culturing advances—are addressing these challenges and opening up new opportunities [3]. Consequently, interest in natural products as drug leads is being revitalized, particularly for tackling antimicrobial resistance [3].

The structural diversity of natural products offers distinct advantages over the output of standard combinatorial chemistry. Compared with combinatorial compounds, natural products tend to have more sp³-hybridized bridgehead atoms, more chiral centers, higher oxygen but lower nitrogen content, higher molecular weight, more H-bond donors and acceptors, lower cLogP values, greater molecular rigidity, and a preference for aliphatic over aromatic rings [4]. These characteristics contribute to their success as drug candidates: as many as 20% of natural products lie in the chemical space beyond Lipinski's "Rule of Five" (Ro5) yet still show therapeutic potential against life-threatening diseases such as HIV infection, cancer, and cardiovascular conditions [4].

Chemoinformatic Analysis of Natural Product Libraries

Chemoinformatic approaches have become essential tools for analyzing and designing natural product-like compound libraries. Analysis of natural product chemical space reveals distinct properties compared to synthetic compounds. Natural products generally exhibit greater structural complexity, with higher numbers of stereogenic centers and increased molecular rigidity [4]. These characteristics make them particularly valuable for probing complex biological systems and protein-protein interactions where traditional small molecules often fail.

The design of natural product-like compound libraries typically employs two main approaches: similarity-based filtering and substructure analysis. Similarity-based methods compare 2D fingerprints of candidate compounds with those of known natural compound scaffolds and retain candidates that reach a Tanimoto similarity cut-off (e.g., 85%), yielding a structurally diverse set of compounds with natural product-like characteristics [4]. Substructure analysis searches compound collections for natural product-like scaffolds and relevant functional groups, focusing on structural motifs such as coumarins, flavonoids, aurones, alkaloids, and other natural product-derived frameworks [4].
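
The similarity-filtering step can be illustrated with a short Python sketch. It assumes each 2D fingerprint has already been computed and is stored as a set of on-bit indices; the compound names, bit sets, and the helpers `tanimoto` and `filter_np_like` are hypothetical, and the 0.85 default simply mirrors the example cut-off above.

```python
from typing import Dict, List, Set


def tanimoto(a: Set[int], b: Set[int]) -> float:
    """Tanimoto coefficient of two binary fingerprints given as sets of on-bits."""
    shared = len(a & b)
    union = len(a) + len(b) - shared
    return shared / union if union else 0.0


def filter_np_like(
    candidates: Dict[str, Set[int]],
    np_references: Dict[str, Set[int]],
    cutoff: float = 0.85,
) -> List[str]:
    """Keep candidates whose best similarity to any reference NP scaffold meets the cut-off."""
    selected = []
    for name, fp in candidates.items():
        best = max((tanimoto(fp, ref) for ref in np_references.values()), default=0.0)
        if best >= cutoff:
            selected.append(name)
    return selected


# Hypothetical toy fingerprints, for illustration only.
references = {"np_scaffold_A": {1, 4, 7, 9, 12, 15, 20}}
library = {
    "cmpd_1": {1, 4, 7, 9, 12, 15},  # shares 6 of 7 bits: similarity 6/7, about 0.857
    "cmpd_2": {2, 3, 5, 8, 13},      # shares no bits: similarity 0.0
}
print(filter_np_like(library, references))  # ['cmpd_1']
```

Taking the maximum over all references means a candidate only needs to resemble one known natural product scaffold to be retained.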

Table 3: Chemical Space Descriptors of Natural Products vs. Synthetic Compounds

| Molecular Descriptor | Pure Natural Products (PNP) | Semi-synthetic NPs (SNP) | Natural Product-like Compounds |
|---|---|---|---|
| Molecular Weight (MW, Da) | 393.9 | 409.2 | 389.2 |
| Heavy Atom Count (HAC) | 28.2 | 29.1 | 27.7 |
| ClogP | 2.3 | 3.7 | 3.6 |
| H-bond Donors | 2.7 | 1.4 | 1.4 |
| H-bond Acceptors | 6.6 | 6.4 | 4.2 |
| Topological Polar Surface Area (TPSA, Å²) | 98.9 | 83.2 | 79.8 |
| Ring Count | 3.6 | 3.5 | 3.9 |
| Rotatable Bonds | 5.2 | 6.1 | 5.0 |
| Number of Chiral Atoms | 5.5 | 1.4 | 1.3 |

Natural product-likeness scoring is an important advance in the selection and optimization of natural product-like drugs and synthetic bioactive compounds. These methods score a compound by summing contributions from its molecular fragments, each reflecting how much more frequently that fragment occurs in known natural products than in synthetic small molecules [4]. The resulting scores allow compound libraries to be prioritized for screening campaigns aimed at leads with natural product-like properties.
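
One published formulation of this idea (the NP-likeness score of Ertl and co-workers) assigns each fragment i the contribution log(NP_i/SM_i × SM_t/NP_t), where NP_i and SM_i count the natural products and synthetic molecules containing the fragment and NP_t and SM_t are the sizes of the two training sets; the per-molecule score is the sum of these contributions divided by the number of atoms. The Python sketch below implements that formula under stated assumptions: fragments are given as precomputed string identifiers, the counts are hypothetical, a pseudocount of 1 is used for fragments unseen in a set, and base-10 logarithms are used. It is not necessarily the exact method behind [4].

```python
import math
from typing import Dict, List


def np_likeness(
    fragments: List[str],
    np_counts: Dict[str, int],
    sm_counts: Dict[str, int],
    np_total: int,
    sm_total: int,
    n_atoms: int,
) -> float:
    """Sum of per-fragment log-ratios (NP vs. synthetic frequency), normalized per atom."""
    score = 0.0
    for frag in fragments:
        # Assumed pseudocount of 1 so unseen fragments do not cause division by zero.
        np_i = np_counts.get(frag, 0) or 1
        sm_i = sm_counts.get(frag, 0) or 1
        score += math.log10((np_i / sm_i) * (sm_total / np_total))
    return score / n_atoms


# Hypothetical fragment statistics from two training sets of 100 molecules each.
np_counts = {"frag_a": 90, "frag_b": 10}
sm_counts = {"frag_a": 10, "frag_b": 90}

print(np_likeness(["frag_a", "frag_a"], np_counts, sm_counts, 100, 100, n_atoms=2))  # positive: NP-like
print(np_likeness(["frag_b"], np_counts, sm_counts, 100, 100, n_atoms=1))            # negative: synthetic-like
```

Positive scores indicate fragments enriched in natural products; normalizing by atom count keeps large molecules from scoring high simply because they contain more fragments.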

Recent research has expanded to fragment libraries derived from large natural product databases. Fragment libraries obtained from updated natural product collections, such as the Collection of Open Natural Products (COCONUT), with 695,133 non-redundant natural products, and the Latin America Natural Product Database (LANaPDB), with 13,578 unique natural products from Latin America, provide valuable resources for fragment-based drug discovery [5]. Comparative chemoinformatic analysis of these natural product-derived fragments against synthetic fragment libraries reveals differences in chemical space coverage and diversity, offering insights for library design [5].

Chemoinformatic Analysis Workflow

Advanced Methodologies and Experimental Protocols

AI-Driven Prediction of Experimental Procedures

Recent advances in artificial intelligence have enabled models that convert chemical equations into fully explicit sequences of experimental actions for batch organic synthesis [6]. The Smiles2Actions model is a notable example: it uses sequence-to-sequence models based on the Transformer and BART architectures to predict the entire sequence of synthesis steps from a textual representation of a chemical equation [6]. This approach addresses a critical bottleneck in chemical synthesis, namely converting proposed synthetic routes into executable experimental procedures.

The prediction task involves processing SMILES representations of chemical equations to generate sequences of synthesis actions, with each action consisting of a type with associated properties specific to the action type [6]. These actions cover the most common batch operations for organic molecule synthesis and contain all required information to reproduce a chemical reaction in a laboratory. The format includes actions such as ADD, STIR, FILTER, HEAT, COOL, and RECRYSTALLIZE, with associated parameters for compounds, durations, and temperatures [6].
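
To make the action format concrete, the following Python sketch represents a procedure as a list of typed actions with type-specific properties and serializes it into a single target string of the kind a sequence-to-sequence model could be trained to emit. The `Action` class, the property names, the `$1$`-style compound tokens, and the textual layout are hypothetical and are not the exact format used by Smiles2Actions.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Action:
    """One synthesis step: an action type plus properties specific to that type."""
    action_type: str
    properties: Dict[str, str] = field(default_factory=dict)

    def to_text(self) -> str:
        if not self.properties:
            return self.action_type
        props = ", ".join(f"{key}={value}" for key, value in self.properties.items())
        return f"{self.action_type} ({props})"


def serialize(actions: List[Action]) -> str:
    """Join the actions into one target string, e.g. for seq2seq training."""
    return "; ".join(action.to_text() for action in actions)


# Hypothetical procedure; $1$ and $2$ stand for the first and second reaction inputs.
procedure = [
    Action("ADD", {"compound": "$1$", "amount": "1.0 mmol"}),
    Action("ADD", {"compound": "$2$"}),
    Action("STIR", {"duration": "2 h", "temperature": "room temperature"}),
    Action("FILTER", {"phase_to_keep": "precipitate"}),
    Action("YIELD", {"compound": "product"}),
]
print(serialize(procedure))
```

Keeping the action type separate from its properties lets each type carry only the fields it needs, as in the table below.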

Table 4: Action Types for Experimental Procedure Prediction

| Action Type | Associated Properties | Function in Experimental Protocol |
|---|---|---|
| ADD | Compound identifier, amount | Addition of reactants, reagents, or solvents |
| STIR | Duration, temperature | Mixing of reaction mixture |
| HEAT | Target temperature | Application of heat to reaction |
| COOL | Target temperature | Cooling of reaction mixture |
| FILTER | Phase to keep (precipitate or filtrate) | Separation of solids from liquids |
| EXTRACT | Solvent, phase to keep | Liquid-liquid extraction |
| WASH | Solvent | Washing of solids or liquids |
| DRY | Drying agent (e.g., MgSO₄) | Removal of water from organic phase |
| RECRYSTALLIZE | Solvent system | Purification by recrystallization |
| YIELD | Compound identifier | Collection of final product |

To improve training performance, computational models incorporate restrictions on allowed values for specific properties. For compound names, tokens representing the position of the corresponding molecule in the reaction input are used whenever possible, allowing models to focus on instruction patterns rather than naming conventions [6]. For numerical values like temperatures and durations, predefined ranges are tokenized instead of using exact values, as reaction success typically depends on adequate ranges rather than precise values [6].
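
Both restrictions amount to simple preprocessing of the target text. The Python sketch below shows one way to implement them: compounds that appear in the reaction input are replaced by positional tokens, and temperatures are mapped to range tokens via fixed bin edges. The token spellings, the bin edges, and the function names are assumptions chosen for illustration, not the vocabulary of the cited models.

```python
from bisect import bisect_right
from typing import List

# Hypothetical temperature bin edges (°C); each token covers [lower, upper).
TEMP_EDGES = [0, 15, 30, 60, 100]
TEMP_TOKENS = [
    "<TEMP_BELOW_0>", "<TEMP_0_15>", "<TEMP_15_30>",
    "<TEMP_30_60>", "<TEMP_60_100>", "<TEMP_100_PLUS>",
]


def temperature_token(celsius: float) -> str:
    """Map an exact temperature to the token of the range it falls into."""
    return TEMP_TOKENS[bisect_right(TEMP_EDGES, celsius)]


def compound_token(name: str, reaction_inputs: List[str]) -> str:
    """Use the 1-based position in the reaction input when the compound is found there."""
    if name in reaction_inputs:
        return f"${reaction_inputs.index(name) + 1}$"
    return name  # fall back to the literal name, e.g. for a workup solvent


inputs = ["Brc1ccccc1", "CCO"]
print(compound_token("CCO", inputs), temperature_token(25.0))  # $2$ <TEMP_15_30>
```

With range tokens, 22 °C and 25 °C map to the same token, so the model learns the range that matters rather than memorizing exact values.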

Analytical and Dereplication Techniques

Modern natural product research employs advanced analytical techniques for metabolite identification and dereplication. High-performance liquid chromatography coupled with high-resolution mass spectrometry (LC-HRMS) and nuclear magnetic resonance (NMR) spectroscopy provide powerful tools for the comprehensive study of natural product extracts [3]. These technologies enable researchers to rapidly identify known compounds and focus discovery efforts on novel chemical entities.

Dereplication strategies combine chromatographic separation with spectroscopic detection to avoid rediscovering known compounds. State-of-the-art approaches use ultra-high-pressure liquid chromatography (UHPLC) to profile crude plant extracts, coupled with mass spectrometry and NMR for structural characterization [3]. Automated, open-access LC-HRMS systems support drug discovery projects by providing rapid analysis of natural product extracts [3].
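
At its simplest, the mass-spectrometric side of dereplication compares an observed accurate m/z with the theoretical m/z of known compounds within a ppm tolerance. The Python sketch below does this for protonated molecules ([M+H]⁺) against a three-compound reference list; the reference list, the 5 ppm tolerance, the function names, and the restriction to a single adduct type are assumptions for illustration. Practical workflows typically add further evidence such as retention time, isotope patterns, MS/MS spectra, or NMR data.

```python
import re
from typing import List

# Monoisotopic masses (u) of the most abundant isotope of each element used here.
MONOISOTOPIC = {"C": 12.0, "H": 1.00782503207, "N": 14.0030740048, "O": 15.99491461956}
PROTON = 1.007276466  # mass of a proton (u), added for [M+H]+ ions


def monoisotopic_mass(formula: str) -> float:
    """Neutral monoisotopic mass from a simple formula such as 'C17H19NO3'."""
    mass = 0.0
    for element, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        mass += MONOISOTOPIC[element] * (int(count) if count else 1)
    return mass


# Small illustrative reference list; a real search would query a full NP database.
REFERENCE = {"morphine": "C17H19NO3", "quinine": "C20H24N2O2", "artemisinin": "C15H22O5"}


def dereplicate(mz_observed: float, tol_ppm: float = 5.0) -> List[str]:
    """Return reference compounds whose [M+H]+ m/z lies within tol_ppm of the observed value."""
    hits = []
    for name, formula in REFERENCE.items():
        mz_theoretical = monoisotopic_mass(formula) + PROTON
        error_ppm = (mz_observed - mz_theoretical) / mz_theoretical * 1e6
        if abs(error_ppm) <= tol_ppm:
            hits.append(name)
    return hits


print(dereplicate(286.1438))  # ['morphine']
```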

Metabolomic profiling has emerged as a key strategy in natural product research, enabling the comprehensive study of metabolite pools in biological systems. This approach combines analytical chemistry techniques with multivariate statistical analysis to identify differential metabolites in complex natural extracts [1]. By integrating metabolomic data with genomic information, researchers can gain insights into biosynthetic pathways and optimize production of valuable natural products.

Table 5: Key Research Resources and Tools for Natural Product Research

| Resource Category | Specific Tools/Databases | Function/Application |
|---|---|---|
| Natural Product Databases | COCONUT (Collection of Open Natural Products) | Access to >695,000 non-redundant natural products for virtual screening and chemoinformatic analysis [5] |
| | LANaPDB (Latin America Natural Product Database) | Specialized database of 13,578 unique natural products from Latin American biodiversity [5] |
| Fragment Libraries | CRAFT Library | 1,214 fragments based on novel heterocyclic scaffolds and natural product-derived chemicals [5] |
| | NP-derived Fragment Libraries | 2,583,127 fragments derived from COCONUT database for fragment-based drug discovery [5] |
| Screening Libraries | Natural Product-like Compound Library | >15,000 synthetic compounds with structural similarity to natural products for HTS and HCS [4] |
| Analytical Tools | LC-HRMS-NMR Hyphenated Systems | Combined liquid chromatography-high resolution mass spectrometry-NMR for metabolite identification and dereplication [3] |
| Computational Tools | Natural Product-likeness Calculator | Evaluation of compound natural product-likeness based on frequency of molecular fragments [4] |
| | Smiles2Actions Models | AI-driven prediction of experimental procedures from chemical equations [6] |

Natural Product Research Resource Ecosystem

The integration of these resources creates a powerful ecosystem for natural product-based drug discovery. Database resources provide the foundational chemical information necessary for virtual screening and chemoinformatic analysis [5]. Physical screening libraries, whether based on natural products, natural product-like compounds, or fragments, enable experimental validation of computational predictions [4]. Advanced analytical tools facilitate structural characterization and dereplication, accelerating the identification of novel bioactive compounds [3]. Finally, computational algorithms and AI models create a feedback loop that informs the design of improved libraries and experimental approaches [6].

This toolkit continues to evolve with technological advancements. Recent developments in genome mining, microbial culturing techniques, and synthetic biology approaches are expanding access to previously inaccessible natural products [3]. As these resources mature, they promise to enhance the efficiency and success rate of natural product-based drug discovery, addressing unmet medical needs through the unique structural diversity offered by natural products.

Natural products (NPs) have historically been the most significant source of bioactive compounds for medicinal chemistry. From 1981 to 2019, approximately 64.9% of the 185 small molecules approved to treat cancer were unaltered natural products or synthetic drugs containing a natural product pharmacophore [7] [8]. This therapeutic potential, combined with the unique structural complexity of NPs, has driven the development of specialized databases to organize their chemical information for computational research. These databases serve as crucial resources for computer-aided drug design (CADD), enabling virtual screening, chemoinformatic analysis, and the training of artificial intelligence algorithms [7]. The systematic organization of natural products into searchable, annotated collections allows researchers to navigate the vast chemical space of biological compounds efficiently, facilitating structure-activity relationship studies and the identification of novel drug candidates [7] [9].

This technical guide provides an in-depth analysis of key public natural product databases, with particular focus on the global COCONUT resource and region-specific collections such as the Latin American Natural Products Database (LANaPDB). Within the broader context of chemoinformatic analysis of natural product libraries, we characterize their contents, describe standard methodologies for their analysis, and illustrate their complementary roles in natural product-based drug discovery. The databases discussed herein are unified by their open access nature, making them particularly valuable for the research community.

Comprehensive Database Profiles

COCONUT: A Global Open Resource

The COlleCtion of Open Natural prodUcTs (COCONUT) is one of the largest and most comprehensive open-access natural product databases available. Launched in 2021 and substantially overhauled in its 2.0 version, COCONUT serves as an aggregated dataset of elucidated and predicted NPs collected from numerous open sources worldwide [10] [11]. Its mission is to provide a unified platform that simplifies natural product research and enhances computational screening and other in silico applications [12].

Key Features: COCONUT contains over 695,000 unique natural product structures, including 82,220 molecules without stereocenters, 539,350 molecules with defined stereochemistry, and 73,563 molecules with stereocenters but undefined absolute stereochemistry [9]. The database is openly accessible online and provides multiple search capabilities, including textual information search and structure, substructure, and similarity searches [10] [11]. All data in COCONUT is available for bulk download in SDF, CSV, and database dump formats, facilitating integration with other structural feature-based databases for dereplication purposes [9]. A key feature of COCONUT 2.0 is its support for community curation and data submissions, enhancing the database's comprehensiveness and accuracy over time [10].

LANaPDB: A Regional Collaborative Effort

The Latin American Natural Products Database (LANaPDB) represents a collective effort from researchers across several Latin American countries to create a public compound collection gathering chemical information from this biodiversity-rich geographical region [7] [8]. The database unifies natural product information from six countries and in its first version contained 12,959 curated chemical structures [7]. A more recent update indicates the database has grown to 13,579 compounds [13].

Structural Composition: Analysis of LANaPDB's chemical composition reveals a distinct profile dominated by specific natural product classes: terpenoids constitute the most abundant class (63.2%), followed by phenylpropanoids (18%) and alkaloids (11.8%) [7] [8]. This structural distribution reflects the unique botanical sources and metabolic pathways characteristic of Latin American biodiversity. The database was constructed through a collaborative network spanning research institutions in Mexico, Costa Rica, Peru, Brazil, Panama, and El Salvador, representing a significant achievement in regional scientific cooperation [8].

Regional and Specialized Natural Product Databases

Beyond these larger collections, several regional and specialized databases have emerged to capture chemical diversity from specific geographical areas or research foci:

Nat-UV DB: This database represents the first natural products database from a coastal zone of Mexico (Veracruz state) and contains 227 compounds characterized from 1970 to 2024 [13]. Notably, these compounds contain 112 scaffolds, of which 52 are not present in previous natural product databases, highlighting the value of exploring underrepresented biodiversity-rich regions [13].

BIOFACQUIM: A Mexican compound database focused on natural products isolated and characterized in Mexico, containing 531 compounds [14] [13].

UNIIQUIM: Another Mexican natural products database with 855 compounds, complementing the coverage of BIOFACQUIM [13].

Table 1: Key Characteristics of Major Public Natural Product Databases

| Database | Scope | Number of Compounds | Key Features | Access |
|---|---|---|---|---|
| COCONUT | Global | >695,000 [9] | Largest open-access NP database; community curation; extensive search capabilities | Online portal; bulk download [10] [12] |
| LANaPDB | Latin America | 13,579 [13] | Regional focus; high terpenoid content (63.2%); collaborative network | Public compound collection [7] |
| Nat-UV DB | Veracruz, Mexico | 227 [13] | Unique scaffolds (52 not in other DBs); coastal biodiversity focus | First version; specialized regional coverage [13] |
| BIOFACQUIM | Mexico | 531 [13] | Mexican NP focus; used for chemoinformatic method development | Publicly accessible [14] [13] |
| UNIIQUIM | Mexico | 855 [13] | Complementary Mexican NP coverage; curated collection | Publicly accessible [13] |

Chemoinformatic Characterization of Natural Product Databases

Physicochemical Property Analysis

Standard chemoinformatic characterization of natural product databases involves calculating key physicochemical properties relevant to drug discovery. These analyses help researchers understand how natural products compare with approved drugs and synthetic compounds in chemical space.

Key Physicochemical Parameters: The most commonly calculated properties include molecular weight (MW), the calculated logarithm of the octanol/water partition coefficient (ClogP), polar surface area (PSA), number of rotatable bonds (RB), hydrogen bond donors (HBD), and hydrogen bond acceptors (HBA) [13]. These parameters indicate a molecule's likely oral bioavailability, membrane permeability, and overall drug-likeness according to established guidelines such as Lipinski's Rule of Five [7].
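
Lipinski's Rule of Five flags a compound as likely to show poor absorption or permeation when it breaks more than one of four limits: MW above 500 Da, ClogP above 5, more than 5 hydrogen bond donors, or more than 10 hydrogen bond acceptors. A minimal Python check, assuming the descriptors have already been calculated with a chemoinformatics tool, might look like this; the function names and the second example compound are hypothetical.

```python
from typing import Dict

# Lipinski limits; a value strictly above its limit counts as one violation.
RO5_LIMITS = {"MW": 500.0, "ClogP": 5.0, "HBD": 5, "HBA": 10}


def ro5_violations(props: Dict[str, float]) -> int:
    """Count how many Rule of Five limits a compound exceeds."""
    return sum(1 for key, limit in RO5_LIMITS.items() if props[key] > limit)


def passes_ro5(props: Dict[str, float], max_violations: int = 1) -> bool:
    """Rule of Five compliance: at most one violation is tolerated."""
    return ro5_violations(props) <= max_violations


# Descriptor values listed earlier in this article for pure natural products (PNP).
pnp_values = {"MW": 393.9, "ClogP": 2.3, "HBD": 2.7, "HBA": 6.6}
# Hypothetical large, polar natural product.
large_np = {"MW": 850.0, "ClogP": 3.1, "HBD": 6, "HBA": 14}

print(ro5_violations(pnp_values), passes_ro5(pnp_values))  # 0 True
print(ro5_violations(large_np), passes_ro5(large_np))      # 3 False
```

Compounds like the second example fall into the beyond-Ro5 space that, as noted earlier, still contains therapeutically useful natural products, so a failed check is a flag for closer inspection rather than grounds for exclusion.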

Property Distribution Patterns: Studies comparing LANaPDB with FDA-approved drugs have revealed that many Latin American natural products satisfy drug-like rules of thumb for physicochemical properties [7] [8]. Similarly, analysis of the Nat-UV DB database showed that its compounds have similar size, flexibility, and polarity to previously reported natural products and approved drug datasets [13]. This overlap in physicochemical property space suggests strong potential for drug discovery applications.

Table 2: Typical Physicochemical Properties of Natural Product Databases Compared to Approved Drugs

| Database | Molecular Weight (Mean) | ClogP (Mean) | H-Bond Donors | H-Bond Acceptors | Rotatable Bonds | Polar Surface Area |
|---|---|---|---|---|---|---|
| LANaPDB | Data from primary literature [15] | Similar to approved drugs [7] | Similar to approved drugs [7] | Similar to approved drugs [7] | Similar to approved drugs [7] | Similar to approved drugs [7] |
| Nat-UV DB | Similar to reference NPs and drugs [13] | Similar to reference NPs and drugs [13] | Similar to reference NPs and drugs [13] | Similar to reference NPs and drugs [13] | Similar to reference NPs and drugs [13] | Similar to reference NPs and drugs [13] |
| Approved Drugs (DrugBank) | Reference values [13] | Reference values [13] | Reference values [13] | Reference values [13] | Reference values [13] | Reference values [13] |

Structural Diversity and Scaffold Analysis

Assessment of molecular scaffolds provides crucial information about the structural diversity contained within natural product databases and their potential to provide novel chemotypes for drug discovery.

Bemis-Murcko Scaffold Analysis: This approach reduces each molecule to its core framework by removing acyclic side chains while retaining ring systems and the linkers that connect them, enabling quantification of scaffold diversity and identification of privileged structures [13]. Analysis of LANaPDB has shown that terpenoids, phenylpropanoids, and alkaloids are the most abundant structural classes [7] [8]. Similarly, examination of Nat-UV DB revealed 112 distinct scaffolds, 52 of which are not found in other natural product databases, underscoring the value of exploring region-specific biodiversity [13].

Scaffold Frequency and Uniqueness: The frequency of scaffold occurrence helps identify "privileged scaffolds", that is, structures capable of providing useful ligands for more than one receptor [7] [8]. These privileged scaffolds can serve as core structures around which compound libraries are built [7]. Regional databases often contain scaffolds absent from global collections, highlighting their importance in expanding accessible chemical space.
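
Once each compound has been reduced to its scaffold (for instance with RDKit's `MurckoScaffold` module), frequency and uniqueness statistics come down to counting. The Python sketch below assumes the scaffolds are already available as canonical SMILES strings, so that identical scaffolds compare equal; the function name, the reference set, and the toy data are hypothetical. High frequency alone does not make a scaffold privileged, which also requires evidence of activity at more than one receptor.

```python
from collections import Counter
from typing import Dict, Iterable, Set


def scaffold_summary(
    compound_scaffolds: Iterable[str], reference_scaffolds: Set[str], top_n: int = 3
) -> Dict[str, object]:
    """Frequency and uniqueness statistics for precomputed, canonical scaffold SMILES."""
    counts = Counter(compound_scaffolds)
    return {
        "n_compounds": sum(counts.values()),
        "n_scaffolds": len(counts),
        # Ties keep first-encountered order (Counter.most_common behavior).
        "most_frequent": counts.most_common(top_n),
        "singletons": sum(1 for c in counts.values() if c == 1),
        "not_in_reference": sorted(s for s in counts if s not in reference_scaffolds),
    }


# Hypothetical data: scaffolds of five compounds and a reference database's scaffold set.
scaffolds = ["c1ccccc1", "c1ccccc1", "C1CCCCC1", "c1ccc2ccccc2c1", "c1ccccc1"]
reference = {"c1ccccc1", "C1CCCCC1"}
summary = scaffold_summary(scaffolds, reference)
print(summary["most_frequent"][0], summary["not_in_reference"])
```

The `not_in_reference` list corresponds to the kind of count reported for Nat-UV DB, where scaffolds were compared against previously published natural product databases.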

Chemical Space Visualization and Diversity Assessment

Chemical Space Visualization: The concept of the "chemical multiverse" has been employed to generate multiple chemical spaces from different molecular representations and dimensionality reduction techniques [7]. This approach involves calculating molecular fingerprints (such as ECFP4), followed by dimensionality reduction using techniques like t-distributed Stochastic Neighbor Embedding (t-SNE) to visualize chemical space in two or three dimensions [13]. Comparative studies have shown that the chemical space covered by LANaPDB completely overlaps with COCONUT and, in some regions, with FDA-approved drugs [7] [8].

Consensus Diversity Plots: Researchers use consensus diversity plots to compare the chemical diversity of different compound datasets considering multiple representations simultaneously, including chemical scaffolds and fingerprint-based diversity [13]. These analyses have demonstrated that specialized regional databases like Nat-UV DB have higher structural and scaffold diversity than approved drugs but lower diversity compared to larger natural product collections [13].
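
A consensus diversity plot places each dataset at a point defined by (at least) a scaffold-diversity value and a fingerprint-diversity value. The Python sketch below computes two simple stand-ins: the scaled Shannon entropy of the scaffold distribution and the median pairwise Tanimoto similarity of fingerprints given as sets of on-bits. These particular metrics, the function names, and the toy data are assumptions; the published plots may use other measures, such as the area under a scaffold recovery curve.

```python
import math
from collections import Counter
from itertools import combinations
from statistics import median
from typing import List, Sequence, Set


def tanimoto(a: Set[int], b: Set[int]) -> float:
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def median_pairwise_similarity(fps: Sequence[Set[int]]) -> float:
    """Fingerprint axis: a lower median similarity indicates a more diverse set (needs >= 2 fps)."""
    return median(tanimoto(a, b) for a, b in combinations(fps, 2))


def scaled_shannon_entropy(scaffolds: List[str]) -> float:
    """Scaffold axis: 1.0 if compounds are spread evenly over scaffolds, 0.0 if one scaffold."""
    counts = Counter(scaffolds)
    if len(counts) < 2:
        return 0.0
    total = sum(counts.values())
    entropy = -sum((c / total) * math.log2(c / total) for c in counts.values())
    return entropy / math.log2(len(counts))


# Hypothetical toy dataset of four compounds: fingerprints and scaffold assignments.
fps = [{1, 2, 3}, {1, 2, 4}, {5, 6}, {5, 6, 7}]
scaffolds = ["A", "A", "B", "C"]
point = (scaled_shannon_entropy(scaffolds), median_pairwise_similarity(fps))
print(round(point[0], 3), point[1])  # 0.946 0.0
```

Computing such a point for each database (for example, a regional collection, a global NP collection, and approved drugs) and plotting them together gives the side-by-side comparison described above.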

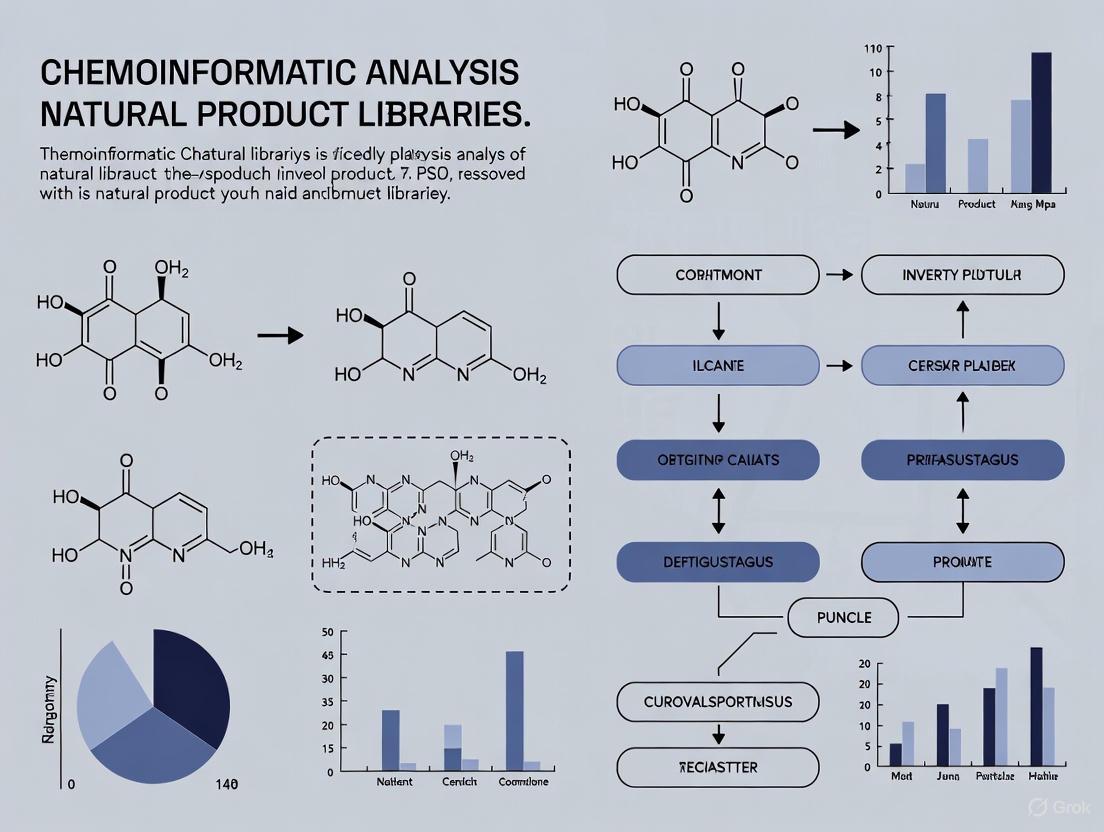

Figure 1: Chemoinformatic Characterization Workflow for Natural Product Databases

Research Protocols and Experimental Methodologies

Database Construction and Curation Protocols

The construction of reliable natural product databases requires systematic protocols for data collection, structure curation, and annotation.

Data Collection and Sourcing: Regional databases like Nat-UV DB are typically assembled through comprehensive literature searches encompassing research articles, theses, and institutional repositories [13]. For example, Nat-UV DB construction involved searching databases such as PubMed, Google Scholar, and SciFinder, as well as institutional repositories, using keywords such as "natural product," "NMR," and the name of the specific geographical region [13]. Inclusion criteria often require that compound identification be supported by nuclear magnetic resonance (NMR) spectroscopy and that compounds originate from the specified geographical location [13].

Structure Curation Pipeline: A standardized curation process is essential for database quality. It typically includes generating isomeric SMILES strings that preserve the reported stereochemistry; normalizing structures with the "Wash" function of the Molecular Operating Environment (MOE); removing salts; adjusting protonation states; and removing duplicate molecules [13]. In addition, manual cross-referencing with established databases such as PubChem and ChEMBL allows compounds to be annotated with associated bioactivities [13].
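
A drastically simplified, toolkit-free version of two of these steps (salt removal and duplicate removal) is sketched below in Python. It treats the longest dot-separated SMILES component as the parent structure and removes exact string duplicates while preserving input order; the function names are hypothetical, and the sketch is an assumption-laden stand-in for MOE's Wash function. Because it performs no canonicalization or protonation-state adjustment, differently written forms of the same compound survive as separate entries, as the example shows.

```python
from typing import Iterable, List


def strip_salts(smiles: str) -> str:
    """Keep the longest dot-separated component as a crude proxy for the parent compound."""
    return max(smiles.split("."), key=len)


def curate(records: Iterable[str]) -> List[str]:
    """Salt stripping followed by order-preserving removal of exact duplicates."""
    seen = set()
    curated = []
    for smiles in records:
        parent = strip_salts(smiles.strip())
        if parent and parent not in seen:
            seen.add(parent)
            curated.append(parent)
    return curated


raw = [
    "CC(=O)Oc1ccccc1C(=O)O",            # aspirin
    "CC(=O)Oc1ccccc1C(=O)[O-].[Na+]",   # sodium salt: counter-ion removed, charge kept
    " CC(=O)Oc1ccccc1C(=O)O ",          # duplicate with stray whitespace
]
print(curate(raw))
# The carboxylate survives as a separate entry because no protonation-state
# adjustment or canonicalization is performed in this sketch.
```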

Virtual Screening and Bioactivity Prediction

Natural product databases serve as primary resources for virtual screening campaigns aimed at identifying novel bioactive compounds.

Structure-Based Virtual Screening (SBVS): When the three-dimensional structure of a target protein is available, molecular docking can be employed to screen natural product databases against specific biological targets [7] [9]. For instance, researchers have explored the immuno-oncological activity of NPs targeting the PD-1/PD-L1 immune checkpoint by estimating half maximal inhibitory concentration (IC₅₀) through molecular docking scores [9].
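
A common back-of-the-envelope conversion treats a docking score as a binding free energy and inverts ΔG = RT ln K_i to obtain an estimated inhibition constant, which is then used as a rough proxy for IC₅₀. The Python sketch below implements that conversion; whether the cited study used exactly this relationship is not stated here, and both approximations (score ≈ ΔG, K_i ≈ IC₅₀) are assumptions that docking scores only loosely satisfy. The function name is hypothetical.

```python
import math

# Gas constant in kcal/(mol*K).
R_KCAL = 1.987204e-3


def estimated_ki_molar(docking_score_kcal: float, temperature_k: float = 298.15) -> float:
    """Invert dG = RT ln(Ki), treating the docking score (kcal/mol) as dG."""
    return math.exp(docking_score_kcal / (R_KCAL * temperature_k))


ki = estimated_ki_molar(-8.0)
print(f"Estimated Ki: {ki * 1e6:.2f} uM")
```

A score of -8.0 kcal/mol corresponds to roughly 1.4 µM at 298 K, and each additional -1.36 kcal/mol lowers the estimate by about a factor of ten, which is why small scoring errors translate into large potency uncertainties.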

Ligand-Based Virtual Screening (LBVS): When the target structure is unknown, ligand-based approaches such as Quantitative Structure-Activity Relationship (QSAR) models and similarity searching are employed [7]. Recent advances include building QSAR classification models using machine learning techniques like LightGBM (Light Gradient-Boosted Machine), which has shown effectiveness in predicting biological activity from chemical structures [9].

AI-Enhanced Approaches: Artificial intelligence algorithms are increasingly applied to natural product drug discovery, including data-mining traditional medicines, predicting chemical structures from genomes, and de novo generation of natural product-inspired compounds [7]. AI-based scoring functions for molecular docking have demonstrated improved performance in benchmark studies [7].

Spectral Data Analysis and QSDAR Approaches

Quantitative Spectrometric Data-Activity Relationships (QSDAR): This emerging approach predicts biological activity directly from spectral data, particularly NMR spectra, without requiring complete structure elucidation [9]. Machine learning models can classify bioactivity from ¹H and ¹³C NMR spectra of pure compounds predicted with tools such as the SPINUS program [9]. This strategy has been applied to discover new inhibitors active against cancer cell lines and antibiotic-resistant pathogens [9].
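
Before a classifier can learn from spectra, each spectrum has to be turned into a fixed-length feature vector. One common option, shown in the Python sketch below, is to count chemical shifts falling into fixed-width ppm bins; the 0 to 220 ppm window, the 10 ppm bin width, the function name, and the example shift values are assumptions, not the encoding used in the cited work.

```python
from typing import List, Sequence


def bin_spectrum(
    shifts_ppm: Sequence[float], lo: float = 0.0, hi: float = 220.0, width: float = 10.0
) -> List[int]:
    """Count chemical shifts per fixed-width bin in [lo, hi); shifts outside are ignored."""
    n_bins = int(round((hi - lo) / width))
    vector = [0] * n_bins
    for shift in shifts_ppm:
        if lo <= shift < hi:
            index = min(int((shift - lo) // width), n_bins - 1)  # guard against float edge cases
            vector[index] += 1
    return vector


# Illustrative 13C shifts (ppm) for a small molecule; values are for demonstration only.
features = bin_spectrum([18.4, 58.3])
print(len(features), features[1], features[5])  # 22 1 1
```

One such vector per compound can then be paired with activity labels to train a classifier; a ¹H spectrum could be binned the same way with a narrower window and bin width.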

Spectral-Structure Integration: Advanced approaches use graph neural network (GNN) models to predict NMR chemical shifts, enabling the construction of models that connect spectral features to bioactivity [9]. While generally having lower predictive power than QSAR, QSDAR approaches offer the significant advantage of not requiring complete structural determination of compounds [9].
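A QSDAR model needs a fixed-length numeric representation of each spectrum. One simple option is to bin predicted ¹³C chemical shifts into a count histogram along the ppm axis, then classify a new compound by its nearest training spectrum. The sketch below assumes the shifts come from an external predictor such as SPINUS. The 10 ppm bin width, the 0 to 220 ppm window, and the 1-nearest-neighbour classifier are illustrative stand-ins for the machine learning models used in the cited work.

```python
import math


def bin_spectrum(shifts_ppm, ppm_min=0.0, ppm_max=220.0, bin_width=10.0):
    """Turn a list of 13C chemical shifts (ppm) into a fixed-length count vector."""
    n_bins = int(math.ceil((ppm_max - ppm_min) / bin_width))
    vector = [0] * n_bins
    for shift in shifts_ppm:
        if ppm_min <= shift < ppm_max:  # shifts outside the window are ignored
            vector[int((shift - ppm_min) // bin_width)] += 1
    return vector


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def predict_activity(query_shifts, training_set):
    """1-nearest-neighbour sketch: training_set is a list of (shifts, label) pairs."""
    query_vec = bin_spectrum(query_shifts)
    best_label, best_dist = None, float("inf")
    for shifts, label in training_set:
        dist = euclidean(query_vec, bin_spectrum(shifts))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


if __name__ == "__main__":
    # Hypothetical training spectra (predicted 13C shifts, ppm) with activity labels
    training = [
        ([20.1, 31.5, 42.0, 65.3], "inactive"),
        ([118.2, 128.9, 131.4, 168.7], "active"),
    ]
    print(predict_activity([121.0, 129.5, 133.0, 170.2], training))
```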

Table 3: Essential Tools for Natural Product Database Research

| Tool/Resource | Type | Primary Function | Application Example |
|---|---|---|---|
| KNIME Analytics Platform [14] | Data Analytics Platform | Workflow-based data processing and analysis | Chemoinformatic characterization of compound databases [14] |
| Molecular Operating Environment (MOE) [13] | Molecular Modeling Software | Structure curation and normalization | Database washing, protonation state adjustment [13] |
| DataWarrior [13] | Chemoinformatics Software | Physicochemical property calculation | Calculation of MW, ClogP, PSA, HBD, HBA [13] |
| ECFP4 Fingerprints [13] | Molecular Representation | Chemical structure description | Chemical space visualization and diversity analysis [13] |
| t-SNE [13] | Dimensionality Reduction Algorithm | Visualization of high-dimensional data | Mapping chemical space of natural product databases [13] |
| SPINUS [9] | Spectral Prediction Tool | NMR chemical shift prediction | QSDAR model development for bioactivity prediction [9] |
| Bemis-Murcko Scaffolds [13] | Structural Analysis Method | Identification of molecular frameworks | Scaffold diversity analysis and privileged structure identification [13] |

Figure 2: Relationship Between Database Types, Analysis Methods, and Applications

The expanding ecosystem of public natural product databases represents a critical infrastructure for modern drug discovery and chemoinformatic research. Global resources like COCONUT provide unprecedented coverage of natural product space, while regional collections such as LANaPDB and Nat-UV DB capture unique chemical diversity from biodiversity-rich areas. Together, these complementary resources enable researchers to navigate the complex chemical multiverse of natural products through standardized chemoinformatic characterization methods.

Future developments in this field will likely include increased integration of artificial intelligence for predictive modeling, expanded community curation efforts, enhanced spectral-structure-activity relationships, and greater emphasis on standardized metadata annotation including geographical origin, ecological context, and traditional use information. As these databases continue to grow and evolve, they will play an increasingly vital role in bridging traditional knowledge with modern computational approaches to drug discovery, ultimately accelerating the identification of novel therapeutic agents from nature's chemical repertoire.

Analyzing the Unique Physicochemical Properties of Natural Products

Natural products (NPs) remain one of the most prolific sources of inspiration for modern drug discovery, with approximately two-thirds of all small-molecule drugs approved between 1981 and 2019 being directly or indirectly derived from NPs [16]. Between 1981 and 2014 alone, over 50% of newly developed drugs were based on natural products [17]. These compounds, evolved over millions of years through natural selection, possess distinctive chemical structures that contribute to their biological activities across various therapeutic areas [18]. The structural complexity, diverse carbon skeletons, and varied stereochemistry of NPs represent attractive starting points for addressing complex diseases and emerging drug targets [18] [16].

The cheminformatic analysis of natural product libraries has become increasingly important as researchers seek to characterize, profile, and leverage the unique physicochemical properties of these compounds systematically. Computational approaches now play a vital role in organizing NP data, interpreting results, generating and testing hypotheses, filtering large chemical databases before experimental screening, and designing experiments [18]. This technical guide provides an in-depth examination of the unique physicochemical properties of natural products, methodologies for their analysis, and their implications for drug discovery, framed within the broader context of chemoinformatic analysis of natural product libraries.

Comparative Analysis of Key Physicochemical Properties

Natural products occupy a distinctive region of chemical space compared to synthetic compounds (SCs) and approved drugs. This section provides a quantitative analysis of their fundamental physicochemical properties, supported by data extracted from recent chemoinformatic studies.

Molecular Size and Complexity

Table 1: Molecular Size Descriptors of Natural Products vs. Reference Compound Sets

| Compound Set | Molecular Weight (Da) | Heavy Atom Count | Number of Bonds | Molecular Volume | Molecular Surface Area |
|---|---|---|---|---|---|
| Natural Products | 386.1 [19] | 27.8 [19] | 30.5 [19] | 378.4 [19] | 485.2 [19] |
| Synthetic Compounds | 312.7 [19] | 22.4 [19] | 23.9 [19] | 298.1 [19] | 402.3 [19] |
| Approved Drugs | ~350 [18] | - | - | - | - |
| GRAS Flavors | ~150 [20] | - | - | - | - |

Recent time-dependent analyses reveal that NPs discovered over time have shown a consistent increase in molecular size, with contemporary NPs being significantly larger than their historical counterparts and synthetic compounds [19]. This trend can be attributed to technological advancements in separation, extraction, and purification that enable scientists to identify larger compounds more easily. The structural complexity of NPs extends beyond mere size, manifesting in their intricate ring systems and stereochemistry.

Ring Systems and Structural Frameworks

Table 2: Ring System Analysis of Natural Products vs. Synthetic Compounds

| Ring System Parameter | Natural Products | Synthetic Compounds |
|---|---|---|
| Total Number of Rings | 4.2 [19] | 2.8 [19] |
| Aromatic Rings | 0.9 [19] | 1.7 [19] |
| Non-aromatic Rings | 3.3 [19] | 1.1 [19] |
| Ring Assemblies | 1.4 [19] | 1.8 [19] |
| Glycosylation Ratio (%) | 18.5 [19] | 2.1 [19] |

NP ring systems are larger, more diverse, and more complex than those of SCs [19]. The increasing number of rings in recently discovered NPs, particularly non-aromatic rings, suggests a trend toward more complex polycyclic ring systems (such as bridged and spiro rings). SCs contain a greater proportion of aromatic rings, attributable to the prevalent use of aromatic building blocks such as benzene derivatives in their synthesis [19].

Polarity, Flexibility, and Drug-Likeness

Table 3: Polarity, Flexibility and Drug-Like Properties

| Property | Natural Products | Synthetic Compounds | Approved Drugs | GRAS Compounds |
|---|---|---|---|---|
| LogP | 2.8 [19] | 3.2 [19] | 2.5 [20] | 2.5 [20] |
| Topological Polar Surface Area (Ų) | 118.4 [19] | 85.2 [19] | ~90 [18] | ~40 [20] |
| Hydrogen Bond Donors | 3.1 [19] | 1.8 [19] | - | - |
| Hydrogen Bond Acceptors | 5.9 [19] | 4.1 [19] | - | - |
| Rotatable Bonds | 5.2 [19] | 4.3 [19] | - | ~2 [20] |

The lipophilicity profile of NPs is comparable to approved drugs, a key property for predicting human bioavailability [20]. NPs generally exhibit higher polarity metrics (TPSA, HBD, HBA) compared to synthetic compounds, reflecting their evolutionary optimization for biological interactions. GRAS flavoring substances are notably smaller, less polar, and less flexible compared to other compound classes, though their AlogP profile closely matches that of approved drugs [20].

Experimental Protocols for Cheminformatic Analysis

Property Calculation and Profiling

Protocol 1: Calculation of Fundamental Molecular Descriptors

Data Preparation: Standardize chemical structures using tools such as the ChEMBL chemical curation pipeline [21]. This includes checking and validating chemical structures, standardizing based on FDA/IUPAC guidelines, and generating parent structures by removing isotopes, solvents, and salts.

Descriptor Calculation: Compute key physicochemical properties using cheminformatics toolkits:

- Utilize RDKit or CDK for calculating molecular weight, octanol/water partition coefficient (SlogP), topological polar surface area (TPSA), hydrogen bond donors (HBD), hydrogen bond acceptors (HBA), and number of rotatable bonds (RB) [18].

- Apply algorithms for molecular volume and surface area calculations using tools like Canvas software [20].

Statistical Analysis: Generate distribution profiles for each property using box-and-whisker plots to visualize median values, quartiles, and outliers across different compound collections [20].
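The statistics behind a box-and-whisker plot can be computed directly. This sketch uses Python's `statistics.quantiles` with its default "exclusive" method for the quartiles. It assumes the common Tukey convention: whiskers extend to the most extreme values within 1.5 × IQR of the quartiles, and anything beyond is flagged as an outlier. Plotting libraries may use slightly different quartile methods. The molecular weights are made-up values.

```python
import statistics


def box_plot_summary(values):
    """Quartiles, Tukey whiskers, and outliers for one property in one compound collection."""
    q1, median, q3 = statistics.quantiles(values, n=4)  # default 'exclusive' method
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inliers = [v for v in values if low_fence <= v <= high_fence]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "whisker_low": min(inliers),
        "whisker_high": max(inliers),
        "outliers": sorted(v for v in values if v < low_fence or v > high_fence),
    }


if __name__ == "__main__":
    # Hypothetical molecular weights (Da) for a small NP subset
    mw = [310, 350, 362, 380, 395, 410, 420, 1500]
    print(box_plot_summary(mw))
```

Computing one summary per property and per collection (NPs, SCs, drugs) gives the side-by-side distributions described above.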

Protocol 2: Ring System Analysis

- Ring Identification: Implement graph-based algorithms to identify all rings in molecular structures.

- Ring Classification: Categorize rings as aromatic, aliphatic, or heterocyclic based on atom types and bond properties.

- Assembly Determination: Identify ring assemblies (connected systems of rings) using fragmentation algorithms such as the Bemis-Murcko approach [19].

- Glycosylation Analysis: Calculate glycosylation ratios by identifying sugar moieties and their attachment to aglycone structures.
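The assembly and glycosylation steps can be sketched independently of any toolkit. Rings that share at least one atom (fused, spiro, or bridged) belong to the same assembly, which a union-find structure resolves efficiently. The sketch makes three assumptions. Ring atom-index sets are taken from an upstream ring perception step. Sugar rings are assumed to have been detected upstream as well. The 1-phenylnaphthalene atom numbering is a hand-written illustration.

```python
def ring_assemblies(rings):
    """Group rings (collections of atom indices) into assemblies of rings that share atoms."""
    parent = list(range(len(rings)))

    def find(i):
        # Follow parent links to the representative ring, halving the path as we go
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    ring_sets = [set(ring) for ring in rings]
    for i in range(len(ring_sets)):
        for j in range(i + 1, len(ring_sets)):
            if ring_sets[i] & ring_sets[j]:  # shared atom: fused, spiro, or bridged
                parent[find(i)] = find(j)

    assemblies = {}
    for i in range(len(ring_sets)):
        assemblies.setdefault(find(i), []).append(i)
    return list(assemblies.values())


def glycosylation_ratio(sugar_ring_counts):
    """Percentage of compounds with at least one sugar ring (sugar detection done upstream)."""
    if not sugar_ring_counts:
        return 0.0
    glycosides = sum(1 for count in sugar_ring_counts if count > 0)
    return 100.0 * glycosides / len(sugar_ring_counts)


if __name__ == "__main__":
    # Hypothetical atom indices for 1-phenylnaphthalene: two fused rings plus a separate phenyl ring
    rings = [{0, 1, 2, 3, 4, 5}, {4, 5, 6, 7, 8, 9}, {10, 11, 12, 13, 14, 15}]
    print(ring_assemblies(rings))
    print(glycosylation_ratio([0, 2, 0, 1]))
```

For the example, the two naphthalene rings form one assembly and the phenyl ring forms a second. Averaging the number of assemblies per compound gives the "Ring Assemblies" statistic reported in Table 2.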

Chemical Space Visualization and Diversity Analysis

Protocol 3: Chemical Space Mapping Using Principal Component Analysis (PCA)

Descriptor Matrix Construction: Compile a comprehensive set of molecular descriptors for all compounds in the analysis (typically 30-50 descriptors including physicochemical properties, topological indices, and electronic parameters).

Data Preprocessing: Standardize descriptors to have zero mean and unit variance to prevent dominance by high-magnitude descriptors.

Dimensionality Reduction: Perform PCA using established algorithms to reduce the descriptor matrix to 2 or 3 principal components while retaining maximum variance.

Visualization: Project the compounds into the principal component space and color-code by compound class (NPs, SCs, drugs) to visualize overlap and distinction in chemical space [22].
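The standardization and PCA steps can be sketched without dependencies. Descriptors are z-scored using the population standard deviation, the covariance matrix is formed, and the leading principal components are found by power iteration with deflation. In practice a numerical library would normally do this, for example scikit-learn's `StandardScaler` followed by `PCA`. The fixed starting vector and iteration count here are simplifying assumptions, and the descriptor rows are hypothetical.

```python
import math


def standardize(matrix):
    """Z-score each descriptor column (zero mean, unit variance); constant columns become 0."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    means = [sum(row[j] for row in matrix) / n_rows for j in range(n_cols)]
    stds = [math.sqrt(sum((row[j] - means[j]) ** 2 for row in matrix) / n_rows) for j in range(n_cols)]
    return [[(row[j] - means[j]) / stds[j] if stds[j] > 0 else 0.0 for j in range(n_cols)] for row in matrix]


def covariance(z):
    n, d = len(z), len(z[0])
    return [[sum(r[a] * r[b] for r in z) / n for b in range(d)] for a in range(d)]


def top_components(cov, n_components=2, iterations=500):
    """Leading eigenvectors/eigenvalues of a symmetric matrix via power iteration with deflation."""
    d = len(cov)
    cov = [row[:] for row in cov]
    components, eigenvalues = [], []
    for _ in range(n_components):
        v = [1.0 / (i + 1) for i in range(d)]  # deterministic start vector (assumption)
        lam = 0.0
        for _step in range(iterations):
            w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:  # no variance left along this start direction
                break
            v, lam = [x / norm for x in w], norm
        components.append(v)
        eigenvalues.append(lam)
        # Deflate so the next pass converges to the next-largest component
        for a in range(d):
            for b in range(d):
                cov[a][b] -= lam * v[a] * v[b]
    return components, eigenvalues


def pca_project(matrix, n_components=2):
    """Return per-compound principal component scores and the component eigenvalues."""
    z = standardize(matrix)
    comps, eigvals = top_components(covariance(z), n_components)
    scores = [[sum(r[j] * c[j] for j in range(len(r))) for c in comps] for r in z]
    return scores, eigvals


if __name__ == "__main__":
    # Hypothetical rows: [MW, logP, TPSA, HBD]
    descriptors = [[386.1, 2.8, 118.4, 3], [312.7, 3.2, 85.2, 2], [450.0, 1.5, 140.0, 5], [280.0, 3.9, 60.0, 1]]
    scores, eigenvalues = pca_project(descriptors)
    # With no constant columns, total variance of z-scored data equals the number of descriptors
    print("explained variance ratio:", [round(ev / len(descriptors[0]), 3) for ev in eigenvalues])
    for row in scores:
        print([round(x, 3) for x in row])
```

The first two columns of `scores` are the coordinates to plot, color-coded by compound class.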

Figure 1: Workflow for Chemical Space Analysis of Natural Product Libraries

Protocol 4: Scaffold-Based Diversity Analysis

Molecular Scaffold Generation: Extract molecular frameworks using the Bemis-Murcko method, which reduces molecules to their core ring systems and linkers [19].

Scaffold Frequency Analysis: Calculate the prevalence of each unique scaffold within compound collections.

Scaffold Tree Construction: Organize scaffolds hierarchically based on structural similarity and complexity using scaffold tree algorithms [22].

Diversity Metrics Calculation: Quantify scaffold diversity using measures such as scaffold diversity index (number of unique scaffolds divided by total compounds) and Gini coefficient to assess distribution uniformity.
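Once each compound has one scaffold string, the frequency, diversity-index, and Gini calculations reduce to counting. The scaffold strings might be canonical Murcko scaffold SMILES produced by RDKit's `MurckoScaffold` module, for example. The sketch below implements the scaffold diversity index exactly as defined above. It also computes the Gini coefficient over scaffold counts: 0 means every scaffold is equally common, and values approaching 1 mean a few scaffolds dominate. The example scaffold strings are illustrative, and scaffold-tree construction is not covered.

```python
from collections import Counter


def scaffold_frequencies(scaffolds):
    """Count how many compounds share each scaffold string."""
    return Counter(scaffolds)


def scaffold_diversity_index(scaffolds):
    """Number of unique scaffolds divided by the number of compounds."""
    return len(set(scaffolds)) / len(scaffolds) if scaffolds else 0.0


def gini_coefficient(counts):
    """Gini coefficient of scaffold counts (0 = perfectly even distribution)."""
    values = sorted(counts)
    n, total = len(values), sum(values)
    if n == 0 or total == 0:
        return 0.0
    # Sorted-data form of sum_i sum_j |x_i - x_j| / (2 * n^2 * mean)
    weighted = sum((2 * (i + 1) - n - 1) * x for i, x in enumerate(values))
    return weighted / (n * total)


if __name__ == "__main__":
    scaffolds = ["c1ccccc1", "c1ccccc1", "C1CCCCC1", "c1ccncc1"]  # one scaffold per compound
    freqs = scaffold_frequencies(scaffolds)
    print(freqs.most_common())
    print(scaffold_diversity_index(scaffolds), round(gini_coefficient(list(freqs.values())), 3))
```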

Advanced Analytical Approaches

Temporal Analysis of Structural Evolution

- NPs have become larger, more complex, and more hydrophobic over time, exhibiting increased structural diversity and uniqueness [19].

- The glycosylation ratios of NPs and the mean number of sugar rings per glycoside have increased gradually over time [19].

- SCs exhibit a continuous shift in physicochemical properties, yet these changes are constrained within a defined range governed by drug-like constraints, and SCs have not fully evolved in the direction of NPs [19].

In Silico ADME/Tox Profiling

Computational prediction of absorption, distribution, metabolism, excretion, and toxicity (ADME/Tox) properties is crucial for prioritizing NPs for experimental testing:

- Rule-Based Filters: Apply established rules like Lipinski's Rule of Five and related criteria to assess drug-likeness [18].

- Machine Learning Models: Use classifiers trained on large bioactivity datasets (e.g., ChEMBL) to predict specific ADME/Tox endpoints [16].

- Fragment-Based Analysis: Decompose NPs into molecular fragments and compare with libraries of problematic structural motifs associated with toxicity [18].
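A rule-based filter such as Lipinski's Rule of Five takes only a few lines once descriptors are available. The thresholds are MW ≤ 500 Da, logP ≤ 5, HBD ≤ 5, and HBA ≤ 10. The sketch below applies the common convention that more than one violation flags likely poor oral absorption. Descriptor values are assumed to be precomputed, and the glycoside-like example is hypothetical. Many natural products, such as large glycosides, fall outside these limits, so the filter is better used to annotate NPs than to discard them.

```python
from dataclasses import dataclass


@dataclass
class Descriptors:
    mw: float    # molecular weight (Da)
    logp: float  # calculated octanol/water partition coefficient
    hbd: int     # hydrogen bond donors
    hba: int     # hydrogen bond acceptors


def lipinski_violations(d: Descriptors) -> list:
    """List the Rule of Five criteria that a compound violates."""
    violations = []
    if d.mw > 500:
        violations.append("MW > 500")
    if d.logp > 5:
        violations.append("logP > 5")
    if d.hbd > 5:
        violations.append("HBD > 5")
    if d.hba > 10:
        violations.append("HBA > 10")
    return violations


def passes_rule_of_five(d: Descriptors, max_violations: int = 1) -> bool:
    """True if the compound breaks at most `max_violations` rules."""
    return len(lipinski_violations(d)) <= max_violations


if __name__ == "__main__":
    average_np = Descriptors(mw=386.1, logp=2.8, hbd=3, hba=6)        # Table 1 and Table 3 means, rounded
    glycoside_like = Descriptors(mw=780.0, logp=-1.5, hbd=9, hba=16)  # hypothetical
    for name, d in (("average NP", average_np), ("glycoside-like", glycoside_like)):
        print(name, passes_rule_of_five(d), lipinski_violations(d))
```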

Natural Product-Likeness Scoring

The natural product-likeness score evaluates how closely a molecule resembles known natural products based on structural features:

- Fragment Identification: Generate atom-centered fragments or larger substructures for each molecule.

- Probability Calculation: Calculate the probability of each fragment occurring in NPs versus synthetic compounds using Bayesian models.

- Score Computation: Combine fragment probabilities into a composite natural product-likeness score using established algorithms like NP-Score [21].

This approach has enabled the generation and validation of virtual libraries of natural product-like compounds, such as the database of 67 million NP-like molecules created via molecular language processing [21].
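The Bayesian fragment scoring can be sketched in the spirit of the published NP-likeness score of Ertl and co-workers. Each fragment contributes log10[(NP_i / SC_i) × (SC_t / NP_t)]. Here NP_i and SC_i count the reference natural products and synthetic compounds containing fragment i, and NP_t and SC_t are the sizes of the two reference sets. The molecule's score is the sum of its fragment contributions divided by the number of fragments. With atom-centered fragments, that count equals the atom count. The sketch makes three assumptions. Fragment generation happens upstream. Unseen fragments receive a +1 pseudocount. The fragment labels and counts are hypothetical. For production use, RDKit's Contrib directory ships a full implementation (NP_Score).

```python
import math


def fragment_contribution(fragment, np_counts, sc_counts, n_np, n_sc, pseudocount=1.0):
    """log10[(NP_i / SC_i) * (SC_t / NP_t)], with a pseudocount for fragments unseen in a set."""
    np_i = np_counts.get(fragment, 0) + pseudocount
    sc_i = sc_counts.get(fragment, 0) + pseudocount
    return math.log10((np_i / sc_i) * (n_sc / n_np))


def np_likeness(fragments, np_counts, sc_counts, n_np, n_sc):
    """Mean fragment contribution: > 0 leans natural-product-like, < 0 leans synthetic-like."""
    if not fragments:
        return 0.0
    total = sum(fragment_contribution(f, np_counts, sc_counts, n_np, n_sc) for f in fragments)
    return total / len(fragments)


if __name__ == "__main__":
    # Hypothetical counts: number of molecules in each reference set containing each fragment
    np_counts = {"frag_polyol": 900, "frag_biaryl": 50}
    sc_counts = {"frag_polyol": 40, "frag_biaryl": 700}
    n_np, n_sc = 1000, 1000
    print(np_likeness(["frag_polyol", "frag_polyol"], np_counts, sc_counts, n_np, n_sc))
    print(np_likeness(["frag_biaryl", "frag_unseen"], np_counts, sc_counts, n_np, n_sc))
```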

Table 4: Key Research Resources for Natural Product Cheminformatics

| Resource Category | Specific Tools/Databases | Key Functionality | Application in NP Research |
|---|---|---|---|
| NP Databases | COCONUT [17] [23] | >400,000 open NPs with structures and annotations | Primary source of NP structures for analysis |
| | Natural Products Atlas [23] | Curated database of microbial NPs | Comparative analysis of bacterial/fungal NPs |
| | Super Natural II [16] | >325,000 NPs with predicted activities | Virtual screening and target prediction |
| Analysis Software | RDKit [16] [21] | Open-source cheminformatics toolkit | Descriptor calculation, fingerprint generation |
| | CDK [16] | Chemistry Development Kit | Basic cheminformatics operations |
| | Canvas [20] | Commercial software package | Property calculation, similarity searching |
| Specialized Tools | NPClassifier [21] | Deep learning-based NP classification | Categorizing NPs by pathway/structural class |
| | NP-Score [21] | Natural product-likeness scoring | Quantifying resemblance to known NPs |
| Commercial Libraries | AnalytiCon Discovery [24] | Natural compounds from microbial/terrestrial sources | Source of physical samples for validation |
| | NCI Natural Products Repository [24] | >230,000 crude extracts and purified NPs | Experimental screening materials |

Cheminformatic analysis reveals that natural products possess unique physicochemical properties that distinguish them from synthetic compounds and approved drugs. Their structural complexity, diverse ring systems, and distinct polarity profiles contribute to their success as sources of bioactive compounds for drug discovery. The experimental protocols and resources outlined in this technical guide provide researchers with comprehensive methodologies for characterizing these properties systematically.

Future directions in the field include the increased application of deep generative models to explore novel natural product chemical space, as demonstrated by the generation of 67 million natural product-like compounds using recurrent neural networks [21]. Temporal analysis of structural variations will continue to provide insights into the evolution of natural products and their influence on synthetic compound design. As natural product databases grow and computational methods advance, cheminformatic approaches will play an increasingly vital role in bridging the gap between the structural uniqueness of natural products and their development as therapeutic agents.

Molecular Scaffolds and Core Chemotypes in Natural Product Libraries

Natural products (NPs) and their distinctive molecular scaffolds represent an invaluable resource in drug discovery, historically serving as the source for a significant proportion of approved therapeutics. Notably, 60% of cancer drugs and 75% of infectious disease drugs are derived from natural products [25]. This efficacy is attributed to the evolutionary selection processes that shape natural products, often endowing them with superior biological relevance and pharmacokinetic properties compared to purely synthetic compounds [25]. Within the broader thesis of chemoinformatic analysis of natural product libraries, this whitepaper examines the core molecular scaffolds and chemotypes inherent to NPs. It details the methodologies for their systematic identification, comparative analysis against synthetic libraries, and integration into modern fragment-based drug discovery pipelines, providing a technical guide for researchers and drug development professionals.

The field is being transformed by the availability of large-scale, open-access databases and sophisticated open-source chemoinformatic tools. These resources enable the rigorous, reproducible, and data-driven exploration of natural product chemical space, moving beyond anecdotal evidence to a comprehensive understanding of their scaffold diversity and uniqueness [26].

Chemoinformatic Definitions and Foundational Concepts

Molecular Representations for Scaffold Analysis

Accurate scaffold analysis is predicated on the robust representation of chemical structures. Linear notations are essential for computational processing and storage.

- SMILES (Simplified Molecular Input Line Entry System): A line notation that encodes a molecule's structure as a string of ASCII characters. Basic rules include representing atoms by their atomic symbols, denoting bonds with specific characters (-, =, #), and using parentheses for branches. Because one molecule can be written as many different SMILES strings, canonical SMILES are used to obtain a unique representation for each molecule, which is crucial for database management [27].

- SMARTS (SMILES Arbitrary Target Specification): An extension of SMILES used to specify substructural patterns for substructure search and reaction transformation rules. It employs logical operators and special symbols to create flexible queries for identifying specific scaffolds or functional groups [27].

- InChI (International Chemical Identifier): A non-proprietary, standardized identifier developed by IUPAC and NIST. Its key advantage is resolving chemical ambiguities related to stereochemistry and tautomerism through a layered structure, making it ideal for linking data across diverse compilations and ensuring precise compound identification [26] [27].

Defining Scaffolds and Chemotypes

In chemoinformatics, a "scaffold" is the core molecular framework of a compound. A common method is the Murcko framework decomposition, which systematically removes side chains and functional groups to reveal the central ring system and linker atoms [28]. A "chemotype" often refers to a broader structural class or pattern shared by a group of compounds, which can be defined using SMARTS patterns [27]. The analysis of these core structures enables researchers to categorize vast chemical libraries, assess diversity, and identify "privileged scaffolds"—structures that repeatedly appear in compounds with activity against multiple, unrelated biological targets [25].

Quantitative Landscape of Natural Product Fragment Libraries

Recent research has focused on generating comprehensive fragment libraries by computationally decomposing large natural product collections. These fragments, typically small and simple molecular pieces, are essential for fragment-based drug discovery (FBDD). The quantitative analysis of these libraries reveals the vast scaffold diversity present in nature.

Table 1: Comparative Overview of Publicly Available Fragment Libraries Derived from Natural Products and Synthetic Compounds

| Library Name | Source | Number of Unique Fragments | Source Collection Size | Key Characteristics |
|---|---|---|---|---|
| COCONUT-derived Fragment Library | Collection of Open Natural Products (COCONUT) | 2,583,127 [5] [29] | >695,133 non-redundant NPs [5] [29] | Extremely large-scale; represents a broad spectrum of global NP chemical space. |
| LANaPDB-derived Fragment Library | Latin America Natural Product Database (LANaPDB) | 74,193 [5] [29] | 13,578 unique NPs from Latin America [5] [29] | Focused on regional biodiversity; may contain unique chemotypes. |
| CRAFT Library | Novel heterocyclic scaffolds & NP-derived chemicals | 1,214 [5] [29] | Not specified | Curated for FBDD; based on distinct heterocyclic scaffolds and NP-derived chemicals. |

The data in Table 1 underscores the scale of chemical information available. The nearly 2.6 million fragments derived from the COCONUT database provide an unprecedented resource for exploring the "fragment space" of natural products, offering a high probability of identifying novel and biologically relevant starting points for drug discovery [5] [29].

Methodological Framework for Comparative Chemoinformatic Analysis

A robust comparative analysis requires a multi-faceted approach, assessing compound libraries based on physicochemical properties, scaffold content, and structural fingerprints to gain a holistic view of their chemical space.

Multi-Criteria Comparison Framework

A multi-criteria comparison framework, as outlined in foundational chemoinformatics research, enables a comprehensive assessment of compound libraries [30]. This involves comparing the library of interest (e.g., a natural product fragment library) against reference sets such as known drugs, synthetic combinatorial libraries, and large screening repositories like the Molecular Libraries Small Molecule Repository (MLSMR) [30]. The analysis is built on three pillars:

- Physicochemical Properties: Calculation and comparison of key descriptors including Molecular Weight (MW), number of Rotatable Bonds (RB), Hydrogen Bond Acceptors (HBA), Hydrogen Bond Donors (HBD), Topological Polar Surface Area (TPSA), and the octanol/water partition coefficient (SlogP) [30].

- Scaffold and Framework Analysis: Identification and frequency analysis of molecular frameworks (Murcko scaffolds) to understand the diversity and "privileged" status of core structures [30].

- Fingerprint-Based Similarity: Use of molecular fingerprints (e.g., MACCS keys, Morgan fingerprints) to measure overall structural similarity and diversity within and between libraries [30].

Experimental Protocol: R-NN Curve Analysis for Spatial Overlap

A powerful method to quantify the overlap between a query library (e.g., NP fragments) and a target collection (e.g., drugs) is the R-NN Curve Analysis [30].

Objective: To determine whether the compounds in a query combinatorial or NP library are located in dense or sparse regions of a target collection's property space (e.g., drug space).

Methodology:

- Descriptor Calculation: Compute a set of physicochemical descriptors (e.g., MW, SlogP, HBA, HBD, TPSA, RB) for all compounds in the target collection and the query library.

- Descriptor Scaling: Scale the descriptor values of the target dataset using the median and interquartile range of each descriptor. Apply the same scaling parameters to the query library.

- Spatial Indexing: Load the scaled descriptors of the target collection into a relational database (e.g., Postgres) with a spatial index (e.g., R-tree) for efficient nearest-neighbor search [30].

- R-NN Curve Generation: For each query molecule:

  - Calculate the number of neighbors from the target collection lying within a sphere of radius R, where R ranges from 1% to 100% of the maximum pairwise distance in the target collection.

  - Plot the number of neighbors versus R, generating a sigmoidal R-NN curve.

- Rmax(S) Calculation: For each query molecule, determine the Rmax(S) value—the radius R at which the lower tail of the R-NN curve transitions to the exponentially increasing region. A small Rmax(S) indicates the molecule is in a dense region of the target space; a large value indicates a sparse region.

- Interpretation: Plotting the Rmax(S) values for the entire query library provides an intuitive summary of its spatial distribution relative to the target. A library with many high Rmax(S) values contains numerous novel scaffolds residing in underexplored regions of chemical space [30].
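The procedure above can be sketched as a brute-force calculation for a small target set that fits in memory. The cited approach uses a database R-tree index instead. Median/IQR scaling follows the steps listed above, with an IQR of zero replaced by 1 to avoid division by zero. The Rmax(S) rule here is a hypothetical simplification, not the transition-point criterion of the original method: it takes the first radius at which the neighbour count exceeds 5% of the target size. The two-descriptor data are made up.

```python
import math
import statistics


def robust_scaling_params(target):
    """Per-descriptor (median, IQR) computed on the target collection only."""
    params = []
    for column in zip(*target):
        q1, median, q3 = statistics.quantiles(column, n=4)
        iqr = q3 - q1
        params.append((median, iqr if iqr > 0 else 1.0))  # guard against zero IQR
    return params


def scale(rows, params):
    """Apply the target's median/IQR scaling to any set of descriptor rows."""
    return [[(x - med) / iqr for x, (med, iqr) in zip(row, params)] for row in rows]


def rnn_curve(query, target, n_steps=100):
    """Neighbour counts for radii R = 1%, 2%, ..., 100% of the maximum pairwise target distance."""
    d_max = max(math.dist(a, b) for i, a in enumerate(target) for b in target[i + 1:])
    radii = [d_max * step / n_steps for step in range(1, n_steps + 1)]
    distances = [math.dist(query, t) for t in target]
    counts = [sum(1 for d in distances if d <= r) for r in radii]
    return radii, counts


def r_max(radii, counts, n_target, min_fraction=0.05):
    """Hypothetical proxy for Rmax(S): first radius whose neighbour count exceeds
    `min_fraction` of the target size, taken as the end of the curve's lower tail."""
    threshold = min_fraction * n_target
    for radius, count in zip(radii, counts):
        if count > threshold:
            return radius
    return radii[-1]


if __name__ == "__main__":
    # Hypothetical 2-descriptor target set: a dense cluster plus a sparse tail
    target = [[0, 0], [0.1, 0], [0, 0.1], [0.1, 0.1], [0.05, 0.05], [1, 1], [2, 2], [3, 3]]
    params = robust_scaling_params(target)
    target_scaled = scale(target, params)
    for query in scale([[0.05, 0.05], [6, 6]], params):
        radii, counts = rnn_curve(query, target_scaled)
        print(round(r_max(radii, counts, n_target=len(target)), 3))
```

The query inside the cluster reaches its neighbours at the smallest radius. The distant query needs almost the full radius, mirroring the dense versus sparse interpretation above.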

Essential Tools and Research Reagent Solutions

The modern chemoinformatic analysis of natural product scaffolds relies on a suite of open-source software and open-access data resources that promote reproducibility and collaboration.

Table 2: The Scientist's Toolkit: Key Platforms and Resources for NP Scaffold Analysis

| Tool / Resource Name | Type | Primary Function in NP Analysis | Key Feature |
|---|---|---|---|
| RDKit [28] | Open-Source Cheminformatics Library | Molecular I/O, descriptor calculation, fingerprint generation, scaffold decomposition, and similarity search. | Extensive Python API; integrates with machine learning workflows; includes a PostgreSQL cartridge for large-scale search. |
| QSPRpred [31] | Open-Source QSPR Modelling Tool | Building predictive models for properties/activity based on NP scaffolds; includes data curation and model serialization. | Automated serialization of entire preprocessing and modeling pipeline for full reproducibility. |
| KNIME [31] [27] | Visual Workflow Platform | Building reproducible, GUI-driven workflows for library enumeration, filtering, and analysis without extensive coding. | Integrates RDKit nodes and data processing components; user-friendly visual interface. |
| COCONUT [5] [29] | Open Natural Products Database | Primary source for natural product structures to generate fragment libraries and identify novel scaffolds. | One of the largest open NP collections; freely available. |
| LANaPDB [5] [29] | Natural Product Database | Source for Latin American natural product structures, enabling discovery of region-specific chemotypes. | Focused on regional biodiversity. |
| PubChem [26] | Open Chemical Repository | Source of bioactivity data and synthetic compound structures for comparative analysis. | Massive, integrated public database. |
| InChI [26] [27] | Standardized Identifier | Provides a unique, standard identifier for each NP scaffold to enable unambiguous data integration across sources. | Resolves tautomerism and stereochemistry ambiguities. |

Visualization and Navigation of NP Chemical Space

With NP libraries encompassing millions of fragments, visualizing the occupied chemical space is a critical step in identifying clusters and outliers. Dimensionality reduction techniques like Principal Component Analysis (PCA) are applied to physicochemical properties to create 2D or 3D maps of chemical space [30] [32]. These maps allow researchers to visually assess the overlap and uniqueness of NP libraries compared to synthetic libraries or drugs.

Emerging methods are addressing the "Big Data" challenge in cheminformatics. Deep learning models are now being used not only for prediction but also for generative purposes and for creating intuitive visual maps of chemical space. These maps can be used for the visual validation of QSAR models and the analysis of complex activity landscapes, helping to guide the selection of NP scaffolds for further investigation [32].

The systematic chemoinformatic analysis of molecular scaffolds and core chemotypes in natural product libraries confirms their immense value as a source of structurally diverse and biologically pre-validated starting points for drug discovery. The availability of large open databases like COCONUT and powerful, open-source toolkits like RDKit and QSPRpred has democratized this analysis, enabling a data-driven approach to exploring natural product chemical space [5] [26].

Future research directions in this field are being shaped by artificial intelligence and open science. Key trends include the increased use of deep generative models for the de novo design of natural product-like scaffolds [32], the application of proteochemometric modeling to understand scaffold-target relationships across protein families [31], and a growing emphasis on FAIR (Findable, Accessible, Interoperable, Reusable) data principles to ensure the reproducibility and sustainability of research outputs [26]. The integration of these advanced methodologies will further solidify the role of natural product scaffolds in addressing unmet medical needs through rational drug design.

The Concept of Chemical Space and Diversity in Natural Product Collections

The concept of chemical space provides a powerful theoretical framework for understanding and organizing molecular diversity. In cheminformatics, chemical space is defined as "a concept to organize molecular diversity by postulating that different molecules occupy different regions of a mathematical space where the position of each molecule is defined by its properties" [33]. For natural products (NPs), this conceptual space encompasses the vast array of chemical compounds produced by living organisms, including plants, marine organisms, and microorganisms. Natural products occupy a privileged position in this chemical universe, displaying high structural diversity and complexity that distinguishes them from synthetic compounds [34]. The chemical space of NPs is not merely theoretical; it represents a fundamental resource for drug discovery, with over half of approved small-molecule drugs originating directly or indirectly from natural products [34].

The systematic exploration of NP chemical space faces unique challenges due to the distinctive characteristics of these compounds. NPs frequently exhibit complex structural features, including glycosylation and halogenation patterns, and they often possess higher molecular complexity than synthetic compounds [34]. Understanding the organization and diversity within NP chemical space requires sophisticated chemoinformatic approaches that can quantify, visualize, and compare molecular properties across different biological sources, from terrestrial plants to deep-sea extremophiles [34]. This technical guide explores the core concepts, methodologies, and applications of chemical space analysis specifically for natural product collections, providing researchers with the analytical framework needed to navigate this complex chemical landscape.

Defining Chemical Space and Diversity in the Context of Natural Products

Fundamental Concepts and Metrics

Chemical space is a multidimensional construct where each dimension represents a specific molecular property or descriptor. The position of any molecule within this space is determined by its unique combination of these properties [33]. For natural products, relevant dimensions include structural features (e.g., ring systems, functional groups), physicochemical properties (e.g., molecular weight, lipophilicity), and topological descriptors (e.g., molecular fingerprints) [34] [22]. The concept of a "consensus chemical space" that combines multiple representations has emerged as a promising approach to capture the complexity of NPs more comprehensively [33].

Chemical diversity refers to the degree of variation in molecular structures and properties within a compound collection. Quantitative assessment of this diversity employs similarity indices, with the Tanimoto similarity being particularly well-established due to its consistent performance in structure-activity relationship studies [33]. The intrinsic similarity (iSIM) framework provides an efficient way to quantify the internal diversity of large compound sets. It estimates the average of all distinct pairwise Tanimoto comparisons with O(N) computational complexity, enabling analysis of massive NP datasets that would be prohibitive with traditional O(N²) pairwise approaches [33].

Distinctive Characteristics of Natural Product Chemical Space

Natural products occupy broader and more diverse regions of chemical space compared to synthetic compounds [34]. Analysis of over 1.1 million documented NPs reveals several distinctive characteristics. NPs display high structural diversity and complexity, frequently featuring glycosylation and halogenation patterns [34]. They exhibit clear differentiation based on biological origin; for instance, marine NPs tend to be larger and more hydrophobic than their terrestrial counterparts [34]. NPs from extreme environments, such as deep-sea ecosystems, often contain novel scaffolds with unique bioactivities [34].

Despite this structural richness, NP research faces significant challenges. The discovery rate of novel NP structures is declining, suggesting potential exhaustion of easily accessible sources [34]. Furthermore, only approximately 10% of known NPs are readily purchasable, and redundancy in known scaffolds presents a major bottleneck in NP-based drug discovery [34]. These limitations highlight the need for more sophisticated approaches to identify and explore underrepresented regions of NP chemical space.

Table 1: Key Characteristics of Natural Product Chemical Space Compared to Synthetic Compounds

| Property | Natural Products | Synthetic Compounds |
|---|---|---|
| Structural Diversity | High structural diversity and complexity [34] | Lower complexity, more uniform [34] |
| Common Features | Frequent glycosylation and halogenation [34] | Less common functionalization patterns [34] |
| Chemical Space Coverage | Broader, more diverse regions [34] | More restricted to drug-like space [34] |
| Marine vs. Terrestrial | Marine NPs larger and more hydrophobic [34] | Not applicable |
| Accessibility | Only ~10% purchasable [34] | Generally highly accessible [34] |

Quantitative Analysis of Natural Product Chemical Space

Analytical Frameworks and Diversity Metrics

The quantitative analysis of NP chemical space employs specialized computational frameworks designed to handle the complexity and scale of NP collections. The iSIM (intrinsic similarity) framework quantifies chemical diversity within large NP datasets by closely approximating the average of all distinct pairwise Tanimoto similarities in O(N) time, bypassing the O(N²) cost of explicit pairwise comparisons [33]. It computes the iSIM Tanimoto (iT) value as:

$$iT = \frac{\sum_{i=1}^{M} \frac{k_i(k_i-1)}{2}}{\sum_{i=1}^{M} \left[ \frac{k_i(k_i-1)}{2} + k_i(N-k_i) \right]}$$

where $N$ is the total number of molecules, $M$ is the fingerprint length, and $k_i$ is the number of "on" bits in the $i$-th column of the $N \times M$ fingerprint matrix, i.e., the number of molecules with bit $i$ set [33]. Lower iT values indicate more diverse compound collections, providing a global diversity metric for NP libraries.
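The formula can be sketched in a few lines, assuming fingerprints are given as equal-length 0/1 lists (the function names are hypothetical, not part of any iSIM package). Only the column sums are needed, so the cost is O(N·M). Expanding the sums shows that the numerator counts, over all pairs, the bits both molecules share, and the denominator counts the union of bits for each pair; iT is therefore a pooled Tanimoto over all N(N−1)/2 pairs, which the brute-force helper below reproduces as a check.

```python
from itertools import combinations


def isim_tanimoto(fps: list[list[int]]) -> float:
    """iSIM Tanimoto (iT) from column sums of an N x M binary fingerprint matrix."""
    n, m = len(fps), len(fps[0])
    # k[i] = number of molecules with bit i switched on (column sum)
    k = [sum(row[i] for row in fps) for i in range(m)]
    shared_on = sum(ki * (ki - 1) / 2 for ki in k)   # pairs with both bits on
    mismatched = sum(ki * (n - ki) for ki in k)      # pairs with exactly one bit on
    denom = shared_on + mismatched
    return shared_on / denom if denom else 0.0       # all-empty set: 0.0 by convention


def pooled_pairwise_tanimoto(fps: list[list[int]]) -> float:
    """O(N^2) check: summed intersections over summed unions across all pairs."""
    inter = union = 0
    for a, b in combinations(fps, 2):
        inter += sum(x & y for x, y in zip(a, b))
        union += sum(x | y for x, y in zip(a, b))
    return inter / union


fps = [[1, 1, 0, 0], [1, 0, 1, 0], [1, 1, 1, 0]]
print(round(isim_tanimoto(fps), 4))  # 0.5556
```

For this toy set the column sums are k = (3, 2, 2, 0), giving iT = 5/9; the pooled form is what iSIM computes exactly, and it tracks the arithmetic mean of individual pairwise Tanimoto values closely but not always identically.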

Complementary similarity analysis extends this framework to identify central (medoid-like) and peripheral (outlier) molecules within the chemical space [33]. The complementary similarity of a molecule is the iT of the library computed with that molecule left out. Molecules with low complementary similarity values are central (medoid-like), because removing them leaves a less self-similar set, while those with high values represent structural outliers. This approach enables researchers to map the distribution of compounds within chemical space and identify regions of structural uniqueness or redundancy.
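Because leaving one molecule out only changes each column sum by that molecule's own bit, all N complementary similarities can be obtained from a single pass over the column sums. The sketch below assumes 0/1 list fingerprints; the function name and the toy library are illustrative only.

```python
def complementary_similarity(fps: list[list[int]]) -> list[float]:
    """iT of the library with each molecule left out in turn (O(N*M) overall)."""
    n, m = len(fps), len(fps[0])
    k = [sum(row[i] for row in fps) for i in range(m)]  # full-library column sums
    values = []
    for row in fps:
        shared_on = mismatched = 0.0
        for i in range(m):
            kk = k[i] - row[i]                 # column sum without this molecule
            shared_on += kk * (kk - 1) / 2
            mismatched += kk * ((n - 1) - kk)
        total = shared_on + mismatched
        values.append(shared_on / total if total else 0.0)
    return values


library = [
    [1, 1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0, 0],
    [0, 0, 0, 1, 1, 1],  # structurally unrelated member
]
cs = complementary_similarity(library)
print(cs)  # [0.2, 0.2, 0.2, 1.0]
```

Removing the unrelated fourth molecule leaves three identical fingerprints (iT = 1.0), so it receives the highest value and is flagged as the outlier, while the three redundant members share the lowest value.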

Comparative Analysis of Natural Product Databases

Current databases document over 1.1 million natural products, but the distribution and diversity of these compounds vary significantly across biological sources and geographical origins [34]. Systematic analysis reveals distinct chemical profiles for NPs from different environments. For example, compounds in the Seaweed Metabolite Database (SWMD) occupy a chemical space distinct from that of terrestrial NPs, reflecting their different evolutionary pressures and biosynthetic pathways [34].

Regional NP databases, such as the Peruvian Natural Products Database (PeruNPDB) and the Ethiopian Traditional Herbal Medicine and Phytochemicals Database (ETM-DB), capture region-specific chemical diversity that may be absent from global collections [34]. The recently updated Collection of Open Natural Products (COCONUT) contains over 695,000 non-redundant natural products, while the Latin America Natural Product Database (LANaPDB) includes 13,578 unique NPs from Latin America [5]. Fragment-based analysis of these collections has generated 2,583,127 fragments from COCONUT and 74,193 fragments from LANaPDB, enabling more detailed exploration of specific regions of chemical space [5].

Table 2: Major Natural Product Databases and Their Characteristics

| Database Name | Number of Compounds | Special Features | Key Applications |
|---|---|---|---|
| COCONUT | >695,000 non-redundant NPs [5] | Comprehensive open collection | Fragment analysis, diversity assessment [5] |
| Dictionary of Natural Products | Not specified | Extensive commercial database | Chemical space analysis, trend assessment [34] |
| LANaPDB | 13,578 unique NPs [5] | Latin American natural products | Region-specific diversity studies [5] |
| PeruNPDB | Not specified | Peruvian natural products | In silico drug screening [34] |
| Seaweed Metabolite Database (SWMD) | Not specified | Marine natural products | Cheminformatic analysis of marine NPs [34] |

Methodologies for Chemical Space Visualization and Analysis

Dimensionality Reduction Techniques

Visualization of high-dimensional chemical space requires dimensionality reduction techniques that project molecular descriptors onto two-dimensional or three-dimensional maps while preserving meaningful relationships. Principal Component Analysis (PCA) performs a linear transformation of the data into principal components, providing a fast and well-studied approach, though its linear nature limits its ability to capture complex nonlinear structure in chemical space [35]. Non-linear methods often provide superior visualization for complex NP datasets.
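To show what the linear projection involves, here is a pure-Python sketch of a two-component PCA that finds the leading eigenvectors of the sample covariance matrix by power iteration with deflation. It is an illustration only, with a hypothetical function name; for real NP libraries a linear-algebra library such as NumPy or scikit-learn would normally be used.

```python
import math
import random


def pca_2d(X: list[list[float]], iters: int = 500, seed: int = 0):
    """Project rows of X onto their first two principal components."""
    n, d = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    Xc = [[row[j] - means[j] for j in range(d)] for row in X]  # mean-centred data
    cov = [[sum(r[a] * r[b] for r in Xc) / (n - 1) for b in range(d)] for a in range(d)]

    rng = random.Random(seed)
    components = []
    for _ in range(2):
        v = [rng.random() for _ in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]                # unit-length random start
        for _ in range(iters):                   # power iteration
            w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        # Rayleigh quotient: variance captured along v
        lam = sum(v[a] * sum(cov[a][b] * v[b] for b in range(d)) for a in range(d))
        components.append(v)
        # Deflate so the next iteration finds the next orthogonal direction
        cov = [[cov[a][b] - lam * v[a] * v[b] for b in range(d)] for a in range(d)]

    scores = [[sum(r[j] * c[j] for j in range(d)) for c in components] for r in Xc]
    return scores, components
```

Each score pair is a point on the 2D map; because the projection is a fixed linear map, any nonlinear clustering in the descriptor space is flattened, which is the limitation noted above.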

t-distributed Stochastic Neighbor Embedding (t-SNE) minimizes the Kullback-Leibler divergence between high- and low-dimensional statistical distributions, effectively preserving local structure and generating distinct clusters of similar compounds [35]. Parametric t-SNE enhances this approach by employing an artificial neural network as a deterministic projector from high-dimensional descriptor space to a 2D visualization plane [35]. This determinism enables consistent mapping of new compounds into predefined regions of chemical space, facilitating reproducible navigation and analysis.
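The objective can be made concrete with a small sketch of how t-SNE defines low-dimensional similarities (a Student-t kernel with one degree of freedom) and evaluates KL(P‖Q). It assumes the high-dimensional joint probabilities P are already computed and omits the gradient-descent optimization; function names are hypothetical.

```python
import math


def student_t_affinities(Y: list[list[float]]) -> list[list[float]]:
    """Joint probabilities q_ij on the 2D map using a Student-t (1 d.o.f.) kernel."""
    n = len(Y)
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                d2 = sum((a - b) ** 2 for a, b in zip(Y[i], Y[j]))
                w[i][j] = 1.0 / (1.0 + d2)
    z = sum(map(sum, w))  # normalise over all ordered pairs i != j
    return [[x / z for x in row] for row in w]


def kl_divergence(P: list[list[float]], Q: list[list[float]], eps: float = 1e-12) -> float:
    """KL(P || Q) = sum_ij p_ij * log(p_ij / q_ij), the quantity t-SNE minimises."""
    return sum(
        p * math.log(p / max(q, eps))
        for prow, qrow in zip(P, Q)
        for p, q in zip(prow, qrow)
        if p > 0
    )
```

The heavy-tailed kernel lets moderately dissimilar compounds sit far apart on the map, which is what produces the well-separated clusters; in parametric t-SNE the coordinates Y are the network outputs rather than free parameters.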

Additional visualization methods include Self-Organizing Maps (SOM), Generative Topographic Mapping (GTM), and Multidimensional Scaling (MDS), each with distinct advantages for specific analysis scenarios [35]. The Tree MAP (TMAP) method, based on graph theory, represents data as extensive tree structures and can process datasets exceeding 10 million compounds while maintaining both local and global chemical space structure [35].

Workflow for Chemical Space Analysis of Natural Product Collections

Figure 1: Chemical Space Analysis Workflow for Natural Products

The analytical workflow for NP chemical space analysis begins with data curation and standardization, which includes structure normalization, desalting, and removal of duplicates using canonical SMILES or InChI identifiers [27]. Molecular descriptor calculation transforms structural information into numerical representations, with common approaches including molecular fingerprints (e.g., ECFP, MACCS), physicochemical properties (e.g., molecular weight, logP), and structural descriptors (e.g., ring systems, functional group counts) [22] [35].
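Duplicate removal by canonical identifier can be sketched as below, assuming structures have already been standardized and InChIKeys generated upstream by a cheminformatics toolkit. The function name and the optional `connectivity_only` switch are hypothetical; the switch compares only the first 14-character InChIKey block (the connectivity hash), which collapses stereoisomers into one entry.

```python
def deduplicate(records: list[tuple[str, str]], connectivity_only: bool = False):
    """Keep the first record for each InChIKey; return (kept names, number removed)."""
    kept: dict[str, str] = {}
    removed = 0
    for name, inchikey in records:
        key = inchikey.split("-")[0] if connectivity_only else inchikey
        if key in kept:
            removed += 1
        else:
            kept[key] = name
    return list(kept.values()), removed


# Dummy placeholder keys with the 14-10-1 InChIKey layout (not real compounds)
records = [
    ("np1", "AAAAAAAAAAAAAA-BBBBBBBBSA-N"),
    ("np2", "AAAAAAAAAAAAAA-BBBBBBBBSA-N"),  # exact duplicate of np1
    ("np3", "AAAAAAAAAAAAAA-CCCCCCCCSA-N"),  # same connectivity, different stereo block
    ("np4", "DDDDDDDDDDDDDD-BBBBBBBBSA-N"),
]
print(deduplicate(records))                          # (['np1', 'np3', 'np4'], 1)
print(deduplicate(records, connectivity_only=True))  # (['np1', 'np4'], 2)
```

Whether stereoisomers should be merged depends on the analysis; fingerprints such as ECFP often ignore stereochemistry, so merging can avoid inflated redundancy.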

Dimensionality reduction techniques then project these high-dimensional descriptors onto 2D or 3D maps, with method selection dependent on dataset size and analysis goals [35]. For large NP collections (>100,000 compounds), TMAP or parametric t-SNE are recommended for their scalability and determinism [35]. The resulting chemical space maps enable cluster identification, diversity assessment, and visualization of structure-activity relationships through color coding and point sizing [32] [35].

Visual Validation of QSAR/QSPR Models

Chemical space visualization provides powerful approaches for visual validation of Quantitative Structure-Activity/Property Relationship (QSAR/QSPR) models [35]. By projecting compounds from validation sets onto chemical space maps and color-coding predictions or errors, researchers can identify regions where model performance deteriorates, revealing "model cliffs" where structurally similar compounds exhibit large prediction errors [35]. This visual validation approach complements numerical metrics by providing intuitive understanding of model applicability domains and failure modes, particularly important for complex NP datasets where traditional applicability domain definitions may be insufficient [35].
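One simple way to locate regions where a model deteriorates is to bin the 2D map coordinates into a grid and rank cells by mean absolute error. The sketch below is a hypothetical stand-in for that idea, not MolCompass's implementation; the cell size and the minimum point count are assumed tuning parameters.

```python
import math
from collections import defaultdict


def error_by_region(coords, errors, cell_size=1.0, min_points=3):
    """Mean absolute prediction error per square cell of a 2D chemical-space map.

    coords: (x, y) map positions; errors: predicted minus observed values.
    Returns (cell, mean_abs_error, count) tuples, worst region first.
    """
    cells = defaultdict(list)
    for (x, y), err in zip(coords, errors):
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        cells[cell].append(abs(err))
    summary = [
        (cell, sum(v) / len(v), len(v))
        for cell, v in cells.items()
        if len(v) >= min_points  # skip sparsely populated cells
    ]
    return sorted(summary, key=lambda item: item[1], reverse=True)


coords = [(0.1, 0.2), (0.4, 0.5), (0.8, 0.3), (5.2, 5.1), (5.5, 5.7), (5.9, 5.3)]
errors = [0.1, -0.2, 0.1, 1.5, -2.0, 1.8]
print(error_by_region(coords, errors)[0][0])  # (5, 5)
```

High-error cells point to parts of NP chemical space that the training data cover poorly; a finer grid gives more detail but fewer compounds per cell.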

Tools like MolCompass implement parametric t-SNE models specifically designed for visual validation, enabling researchers to systematically analyze model weaknesses across different regions of NP chemical space [35]. This approach is particularly valuable for regulatory applications of QSAR models, where understanding model limitations is essential for reliable risk assessment of natural products [35].

Experimental Protocols for Chemical Space Analysis

Protocol 1: Diversity Assessment Using iSIM Framework

Purpose: To quantify the intrinsic diversity of natural product collections using the iSIM framework.

Materials and Reagents:

- Natural product database (e.g., COCONUT, LANaPDB, or in-house collection)

- Computing environment with iSIM implementation

- Molecular fingerprint calculator (e.g., RDKit, CDK)

Procedure:

- Data Preparation: Curate the NP dataset by removing duplicates, standardizing structures, and validating chemical integrity. Use InChI keys for consistent duplicate identification [27].

- Fingerprint Generation: Calculate molecular fingerprints for all compounds. Extended-Connectivity Fingerprints (ECFP4) with 1024 bits are recommended for NP analysis [33].

- iSIM Calculation: Apply the iSIM algorithm to compute the intrinsic similarity (iT) value:

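  - Arrange all fingerprints in an $N \times M$ binary matrix (one row per molecule, one column per bit)

  - Calculate the column sums $k_i$ for all $M$ fingerprint bits

  - Apply the iT formula: $iT = \sum_{i=1}^{M} \frac{k_i(k_i-1)}{2} \Big/ \sum_{i=1}^{M} \left[\frac{k_i(k_i-1)}{2} + k_i(N-k_i)\right]$ [33]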

- Interpretation: Lower iT values indicate higher diversity. Compare iT values across different NP subsets (e.g., by source organism, geographical origin) to identify particularly diverse collections.

- Complementary Analysis: Calculate complementary similarity values to identify medoid (central) and outlier (peripheral) compounds. Define medoids as the molecules at or below the 5th percentile of complementary similarity values and outliers as those at or above the 95th percentile [33].
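The 5% cut-offs in the last step can be applied with a simple rank-based selection, assuming complementary similarity values have already been computed for every compound. The function name is hypothetical, and percentile interpolation rules differ between tools, so counts at the boundaries may vary slightly from other implementations.

```python
def select_medoids_and_outliers(comp_sim: list[float], fraction: float = 0.05):
    """Indices of the lowest and highest `fraction` of complementary-similarity values.

    Low values -> medoid-like (central) compounds; high values -> outliers.
    """
    n = len(comp_sim)
    count = max(1, round(fraction * n))  # at least one compound per group
    order = sorted(range(n), key=lambda j: comp_sim[j])
    return order[:count], order[-count:]
```

For a library of 100 compounds this returns the 5 lowest-ranked indices as medoids and the 5 highest-ranked as outliers.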

Protocol 2: Chemical Space Visualization Using Parametric t-SNE

Purpose: To generate deterministic chemical space maps for natural product collections using parametric t-SNE.

Materials and Reagents:

- Curated NP dataset (>10,000 compounds recommended)

- MolCompass framework or custom parametric t-SNE implementation

- Python environment with cheminformatics libraries

Procedure:

- Descriptor Calculation: Compute molecular descriptors or fingerprints for all compounds. Morgan fingerprints with radius 2 and 1024 bits are suitable for parametric t-SNE [35].

- Model Training: Train a parametric t-SNE model using a feed-forward neural network:

  - Network architecture: Input layer (descriptor dimension), 2 hidden layers (500 and 200 neurons), output layer (2 neurons for X,Y coordinates)

  - Training parameters: Perplexity=30, learning rate=200, iterations=1000 [35]

- Projection: Use the trained model to project all compounds onto a 2D chemical space map. The neural network will deterministically map similar scaffolds to consistent regions [35].

- Visualization Enhancement: Apply clustering algorithms (e.g., BitBIRCH) to identify distinct compound clusters. Color-code points by structural class, biological source, or predicted activity [33] [35].

- Map Interpretation: Analyze cluster formation to identify scaffold-rich regions, structural outliers, and diversity gaps. Use interactive visualization tools to explore compound relationships [35].
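The perplexity setting in Protocol 2 controls the effective number of neighbours each compound "sees". In standard t-SNE, a Gaussian bandwidth is tuned for every point by binary search so that the entropy of its neighbour distribution matches log2(perplexity). The sketch below shows that calibration for a single compound, assuming squared descriptor distances to all other compounds are precomputed; it is illustrative and not MolCompass code.

```python
import math


def calibrate_row(sq_dists: list[float], perplexity: float = 30.0,
                  tol: float = 1e-5, max_iter: int = 200):
    """Gaussian conditional probabilities p_{j|i} whose perplexity matches a target.

    sq_dists: squared distances from compound i to every *other* compound.
    Binary search over the precision beta = 1 / (2 * sigma^2).
    Returns (probabilities, beta, achieved perplexity).
    """
    target = math.log2(perplexity)      # target Shannon entropy in bits
    d_min = min(sq_dists)               # shift for numerical stability
    beta, lo, hi = 1.0, 0.0, math.inf
    for _ in range(max_iter):
        weights = [math.exp(-beta * (d - d_min)) for d in sq_dists]
        total = sum(weights)
        probs = [w / total for w in weights]
        entropy = -sum(p * math.log2(p) for p in probs if p > 0)
        if abs(entropy - target) < tol:
            break
        if entropy > target:            # too flat: sharpen the kernel
            lo = beta
            beta = beta * 2 if hi == math.inf else (beta + hi) / 2
        else:                           # too peaked: widen the kernel
            hi = beta
            beta = (beta + lo) / 2
    return probs, beta, 2 ** entropy
```

Compounds in dense scaffold families end up with a large beta (narrow kernel) and isolated ones with a small beta, so each compound is compared against roughly the same number of effective neighbours.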

Protocol 3: Time-Evolution Analysis of NP Chemical Space

Purpose: To analyze how the chemical diversity of natural product databases evolves over time.

Materials and Reagents:

- Sequential versions of NP databases (e.g., different releases of COCONUT or Dictionary of Natural Products)

- iSIM and BitBIRCH algorithms

- Computational resources for large-scale similarity calculations

Procedure:

- Data Collection: Obtain multiple historical versions of the target NP database. Ensure consistent curation and standardization across all versions [33].

- Diversity Trend Analysis: Calculate iT values for each database version to track global diversity changes over time. Plot iT versus release date to visualize diversity trends [33].

- Cluster Evolution Analysis: Apply BitBIRCH clustering to each database version:

  - Use Tanimoto similarity threshold of 0.7 for fingerprint comparison

  - Track formation and growth of clusters across versions

  - Identify newly emerging scaffold classes [33]

- Set Jaccard Analysis: Calculate the Jaccard similarity between successive versions, $J(L_p, L_q) = \frac{|L_p \cap L_q|}{|L_p \cup L_q|}$, where $L_p$ and $L_q$ represent library sectors from different years [33].

- Interpretation: Identify which database releases contributed most to diversity expansion and map the exploration of new regions in chemical space over time [33].
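The set-level comparison in Protocol 3 can be sketched as follows, assuming each release is represented by a set of canonical identifiers (for example InChIKeys) and, for simplicity, treating whole releases as the library sectors. Release labels, identifiers, and function names are hypothetical.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """J(A, B) = |A & B| / |A | B|; two empty sets are treated as identical."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def compare_releases(releases: dict[str, set[str]]):
    """(previous, current, Jaccard, added, removed) for each pair of successive releases."""
    labels = list(releases)  # assumes insertion order = chronological order
    rows = []
    for prev, curr in zip(labels, labels[1:]):
        p, q = releases[prev], releases[curr]
        rows.append((prev, curr, jaccard(p, q), len(q - p), len(p - q)))
    return rows


releases = {
    "v1": {"A", "B", "C"},
    "v2": {"A", "B", "C", "D"},
    "v3": {"B", "C", "D", "E", "F"},
}
for row in compare_releases(releases):
    print(row)
```

A low Jaccard value combined with many additions marks a release that expanded the collection substantially; pairing this with per-release iT values distinguishes growth that adds new chemotypes from growth that adds analogues of existing scaffolds.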

Essential Research Reagents and Computational Tools

Table 3: Essential Research Reagents and Computational Tools for NP Chemical Space Analysis

| Tool/Reagent | Type | Function | Application in NP Research |
|---|---|---|---|
| iSIM Framework | Computational algorithm | Quantifies intrinsic diversity of compound collections | Diversity assessment of large NP databases [33] |
| BitBIRCH | Clustering algorithm | Efficient clustering of large chemical datasets | Identifying scaffold classes in NP collections [33] |